libarchive is a library to handle tar, zip, cpio, pax, and many other archive formats. It uses a "walk through the archive" programming model, generally eschewing random access. Diving straight into it, we'll open a tar archive and list the files therein.

#include <assert.h>

#include <stdio.h>

#include <archive.h>

#include <archive_entry.h>

archive.h contains the APIs for working with archives as a whole; archive_entry.h deals with the individual entries (files) within an archive.

struct archive* archive = archive_read_new();

assert(archive != NULL);

archive_read_new() allocates the data structure to read an archive. It is only allocated in memory, and does not open a file on disk or tape. Later we'll open the file and associate it with the data structure.

if ((archive_read_support_compression_all(archive) != ARCHIVE_OK) ||

(archive_read_support_format_all(archive) != ARCHIVE_OK)) {

archive_read_finish(archive);

// Error handling

}

There is a series of APIs such as archive_read_support_compression_bzip2() or archive_read_support_format_tar() which can restrict the set of allowed formats, but here we set both the compression filter and the format to anything libarchive supports. libarchive relies on external libraries for some things, such as libz for gzip, so the choices made when building libarchive determine which formats it can support. (In libarchive 3.x the compression calls are named archive_read_support_filter_*(); the older names remain as deprecated aliases.)

if (archive_read_open_filename(archive, "foobar.tgz", 8192) != ARCHIVE_OK) {

archive_read_finish(archive);

// Error handling

}

Here we've asked libarchive to open a file by name. There are also archive_read_open_FILE() and archive_read_open_fd() APIs to pass in a FILE* or file descriptor, respectively.

"8192" is the block size, which is used for a few archive formats like tar. Nonetheless libarchive does a good job of determining the real block size if it is incorrect. There is mention of removing the block size parameter in a future version of the library and relying solely on inferring it from the file.

struct archive_entry *entry;

while (archive_read_next_header(archive, &entry) == ARCHIVE_OK) {

printf("file = %s\n", archive_entry_pathname(entry));

}

This is the main point of the routine: iterate through the entries in the file printing filenames, skipping over the data in between. Many archive formats lack a complete table of contents, instead allowing appends to extend the archive ad hoc. archive_read_next_header() will often have to seek through the file to find the next entry. If the file is located on a remote filesystem, this can be slow.
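To make the "walk through the archive" model concrete, here is a minimal sketch in plain C, without libarchive, of stepping through the headers of an uncompressed ustar image held in memory. It relies on the ustar layout (512-byte blocks, pathname at offset 0, size as octal text at offset 124); the function names tar_entry_size, tar_list and tar_put_header are made up for this illustration, and treating a block whose name starts with NUL as end-of-archive is a simplification.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

enum { TAR_BLOCK = 512, TAR_NAME_OFF = 0, TAR_NAME_LEN = 100,
       TAR_SIZE_OFF = 124, TAR_SIZE_LEN = 12 };

/* Hypothetical helper: parse the octal size field of one header block. */
size_t tar_entry_size(const unsigned char *hdr)
{
    char field[TAR_SIZE_LEN + 1];
    memcpy(field, hdr + TAR_SIZE_OFF, TAR_SIZE_LEN);
    field[TAR_SIZE_LEN] = '\0';
    return (size_t)strtoul(field, NULL, 8);
}

/*
 * Walk the headers of an in-memory tar image, copying up to max_names
 * pathnames into names[]. Returns the number of entries seen.
 * There is no table of contents: the only way to find entry N+1 is to
 * read entry N's size and skip over its data.
 */
size_t tar_list(const unsigned char *img, size_t img_len,
                char names[][TAR_NAME_LEN + 1], size_t max_names)
{
    size_t off = 0, count = 0;
    assert(img != NULL);
    /* Simplification: a header whose name begins with NUL ends the archive. */
    while (off + TAR_BLOCK <= img_len && img[off] != '\0') {
        const unsigned char *hdr = img + off;
        if (count < max_names) {
            memcpy(names[count], hdr + TAR_NAME_OFF, TAR_NAME_LEN);
            names[count][TAR_NAME_LEN] = '\0';
        }
        count++;
        /* Data is stored in whole blocks, so round the size up to 512. */
        size_t data = tar_entry_size(hdr);
        size_t padded = (data + TAR_BLOCK - 1) / TAR_BLOCK * TAR_BLOCK;
        if (padded > img_len - off - TAR_BLOCK)
            break;  /* image is truncated; stop rather than run off the end */
        off += TAR_BLOCK + padded;
    }
    return count;
}

/* Demo-only helper: fill in just the two header fields read above. */
void tar_put_header(unsigned char *blk, const char *name, size_t size)
{
    size_t n = strlen(name);
    if (n > TAR_NAME_LEN)
        n = TAR_NAME_LEN;
    memset(blk, 0, TAR_BLOCK);
    memcpy(blk + TAR_NAME_OFF, name, n);
    snprintf((char *)blk + TAR_SIZE_OFF, TAR_SIZE_LEN, "%011lo",
             (unsigned long)size);
}
```

The skip in the middle of the loop is the step that turns into a seek (or a read-and-discard on a pipe or tape) when the archive lives in a file, which is why listing a large archive on a slow filesystem takes a while.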

archive_read_finish(archive);

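When we're done, archive_read_finish() frees the resources allocated by archive_read_new(). (libarchive 3.x calls this archive_read_free() and keeps archive_read_finish() as a deprecated alias.)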

Reading File Contents

To extract a file from the archive you first iterate through archive_read_next_header() until you find one with the filename you want. I'll skip the code which does this as it is identical to that shown above, and start from the point where *entry points to the file we want.

size_t total = archive_entry_size(entry);

char buf[MY_BUF_SIZE];

size_t len_to_read = (total < sizeof(buf)) ? total : sizeof(buf);

ssize_t size = archive_read_data(archive, buf, len_to_read);

if (size <= 0) {

// Error handling

}

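archive_read_data() reads the content of *entry into a buffer, returning the number of bytes read, 0 at the end of the entry, or a negative value on error. There are several variations, such as archive_read_data_block(), which hands back a pointer to a block of data along with its size and its offset within the entry, and archive_read_extract(), which reads the data and writes it to a file on disk.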
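The snippet above reads at most one buffer's worth. For entries larger than the buffer, the usual pattern is to keep calling the read function until it returns 0. Below is a sketch of that loop in plain C; to keep it self-contained it reads through a hypothetical read_fn callback that has the same return convention as archive_read_data(), with an in-memory stand-in (mem_entry, mem_read) instead of a real archive. All of these names are invented for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>
#include <sys/types.h>  /* ssize_t */

/* Hypothetical callback shaped like archive_read_data(): returns the
 * number of bytes read, 0 at end of entry, negative on error. */
typedef ssize_t (*read_fn)(void *ctx, void *buf, size_t len);

/* Read an entry to its end in fixed-size chunks, appending to out.
 * Returns the total byte count, or -1 on error or if out is too small. */
ssize_t drain_entry(read_fn read_chunk, void *ctx, char *out, size_t out_cap)
{
    char buf[16];  /* deliberately tiny so the loop runs several times */
    size_t total = 0;
    assert(read_chunk != NULL);
    for (;;) {
        ssize_t n = read_chunk(ctx, buf, sizeof(buf));
        if (n < 0)
            return -1;              /* read error */
        if (n == 0)
            break;                  /* end of this entry */
        if ((size_t)n > out_cap - total)
            return -1;              /* output buffer too small */
        memcpy(out + total, buf, (size_t)n);
        total += (size_t)n;
    }
    return (ssize_t)total;
}

/* In-memory stand-in for an archive entry, used only for the demo. */
struct mem_entry { const char *data; size_t len; size_t pos; };

ssize_t mem_read(void *ctx, void *buf, size_t len)
{
    struct mem_entry *e = ctx;
    size_t left = e->len - e->pos;
    size_t n = left < len ? left : len;
    memcpy(buf, e->data + e->pos, n);
    e->pos += n;
    return (ssize_t)n;
}
```

With libarchive, the callback would be a thin wrapper that casts ctx back to struct archive * and calls archive_read_data(archive, buf, len).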

Writing Files

Writing to an archive uses a set of APIs similar to those for reading.

struct archive* archive = archive_write_new();

assert(archive != NULL);

archive_write_new() allocates the data structure to track an archive. It does not create anything on disk.

if ((archive_write_set_compression_gzip(archive) != ARCHIVE_OK) ||

(archive_write_set_format_ustar(archive) != ARCHIVE_OK) ||

(archive_write_open_filename(archive, "foobar.tgz") != ARCHIVE_OK)) {

// Error handling

}

Where the read APIs allow "all" as a choice, writing an entry requires you to pick a format. Here I've chosen a tar.gz, and written it to foobar.tgz.

struct archive_entry* entry = archive_entry_new();

assert(entry != NULL);

struct timespec ts;

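// Call clock_gettime() outside assert() so it still runs when NDEBUG is defined

int clock_rc = clock_gettime(CLOCK_REALTIME, &ts);

assert(clock_rc == 0);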

archive_entry_set_pathname(entry, filename);

archive_entry_set_size(entry, contents_len);

archive_entry_set_filetype(entry, AE_IFREG);

archive_entry_set_perm(entry, 0444);

archive_entry_set_atime(entry, ts.tv_sec, ts.tv_nsec);

archive_entry_set_birthtime(entry, ts.tv_sec, ts.tv_nsec);

archive_entry_set_ctime(entry, ts.tv_sec, ts.tv_nsec);

archive_entry_set_mtime(entry, ts.tv_sec, ts.tv_nsec);

Here we create the metadata for a file in the archive, populating it with permissions and timestamps. Not all archive formats support all of these timestamps, but it seems a good idea to populate them in case a different format is chosen later.

int rc = archive_write_header(archive, entry);

archive_entry_free(entry);

entry = NULL;

if (ARCHIVE_OK != rc) {

// Error handling

}

Once the metadata has been written to the archive, the archive_entry is no longer needed.

ssize_t written = archive_write_data(archive, contents, contents_len);

if (written < 0 || (size_t)written != contents_len) {

// Error handling

}

archive_write_finish(archive);

Finally, we write the data. contents is a pointer to a buffer in memory, and contents_len is its length in bytes. archive_write_data() can be called multiple times; each call appends its data immediately after the data from the previous call. There is no random access API with an offset parameter.

Closing Thoughts

libarchive APIs are designed to allow use with either disk or tape. There are no APIs to overwrite bytes in the middle of a file, because tape drives cannot do that without corrupting adjacent data. There is an alternate set of APIs designed for disk in archive_read_disk and archive_write_disk, though I see relatively little difference in them other than accessing the uid/gid of the archive itself.

I hope you find this useful.

One thing this blog has not evolved into is a direct source of income. Initially there were Adsense units on the page, but the math just doesn't work for a site like this. As each technical article can take quite a while to research and polish I found myself computing the hourly rate for time spent writing. This is a highly negative train of thought. Advertising works well for a variety of web sites, but not this one. I removed the ads in late 2008.

Users who have installed the OTF browser extension see the web bar on every site they visit. Site owners can also install the web bar on their pages, making it visible to all visitors whether they use the browser extension or not. Hovering over the pictures in the web bar shows a list of recently shared links. My list of shared links is relatively tame. It's not difficult to imagine links to WoW Gold sales, or porn sites, or any of the other innumerable schemes which spam is used to peddle. The potential for mischief is there.

![I was going to propose this as the official name for Ununquadium[114], but decided that would cross the lameness threshold Toddlergooium periodic table entry](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgpHY4_qV68OKDSikApP2lRnHoFZGyCIRfosP0zhAAOvvsf_ZwXzLsY1c_bRwKdDuBGOqZ29WYaYP2u60IeVXw9FTyMFzcKMp0MSnim_Q0jR5oCJjU5BPaU9mBQ_FQ-uKpwe5fqPsMzrjsq/s320/Toddlergooium.png)

Wait: does that sound weird, based on your experience? You're right, I made it all up. We're not organized as one enormous group, we're grouped into smaller teams like everybody else. Yet to a degree, the larger group has to be able to coordinate between every single person, every day. How is this accomplished?

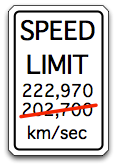

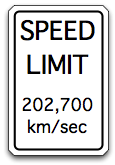

Also I'll state again: the speed of light in fiber only matters for wide area links. The propagation delay in 100 meters of fiber is dwarfed by queueing and software delays, to the point of insignificance. In fact if we could reduce the cost or power consumption of short range lasers by making the speed of light even slower in the fiber they drive, that would be a good tradeoff.
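To put rough numbers on this, here is a small sketch that computes one-way propagation delay from fiber length. The constants are assumptions rather than figures from the text: the vacuum speed of light, and a refractive index of about 1.47 as a typical value for silica fiber. The function name fiber_delay_ns is made up for this example.

```c
#include <assert.h>

/* Assumed constants, not taken from the article. */
#define SPEED_OF_LIGHT_M_PER_S 299792458.0  /* c in vacuum */
#define FIBER_INDEX 1.47                     /* typical silica fiber, approx. */

/* One-way propagation delay in nanoseconds for a fiber of the given length. */
double fiber_delay_ns(double length_m)
{
    assert(length_m >= 0.0);
    /* Light travels at c / n inside the fiber, so delay = length * n / c. */
    return length_m * FIBER_INDEX / SPEED_OF_LIGHT_M_PER_S * 1e9;
}
```

Under those assumptions 100 meters works out to roughly half a microsecond, while 1000 km is on the order of 5 milliseconds, which is why the delay only becomes noticeable on wide area links.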

In January

Fiber optic strands have a central core of material with a high refractive index surrounded by a cladding of material with a slightly lower index. The ratio of the two is set to cause total internal reflection, where the light is confined to the central region and won't diffuse out into the cladding.

As it was located near the center of the US auto industry, there was an extensive automotive program at the University of Michigan (Ann Arbor) with an assortment of guest speakers from the Big Three. I went to several presentations that made quite an impression. One of them was about self-driving cars... in 1991.
