"Soft Error" is a euphemism in the semiconductor industry for "the silicon did the wrong thing." Soft errors can occur when a circuit is infused with a sudden burst of energy from an external source, for example when it is hit by a high energy subatomic particle or by radiation.

Alpha particle strike - two protons plus two neutrons (a helium nucleus), emitted when a heavy radioactive element decays into a lighter element. Alpha particles are so large that the chip packaging will normally block them; they are only a problem when something inside the package undergoes radioactive decay.

Cosmic ray strike - a high energy particle arriving from space, whether from the Sun or from beyond the solar system. These particles are gradually absorbed by the Earth's atmosphere, so they are more of a problem in orbit and at high altitude. Cosmic rays can directly impact the silicon, or can hit a nearby atom and throw off neutrons which in turn cause a soft error.

Beta particle strike - an electron, emitted when a neutron is converted into a proton + electron + antineutrino. Beta particles rarely hold enough energy to affect current silicon technology; alpha and cosmic ray strikes are more of a problem.

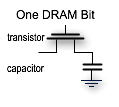

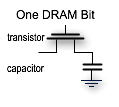

Soft errors are usually discussed in the context of DRAM, where the problem was initially noticed. DRAM consists of a capacitor to store the bit, with a transistor to gate access to it. A capacitor is an energy storage element: it stores charge, which appears as a voltage across it. A particle strike on the capacitor will impart a large amount of energy, which can spontaneously change it to a 1 or, more rarely, overload its capacity such that the energy quickly escapes into the substrate and leaves the bit as a 0.

Some quick searching will turn up a few facts about soft errors:

- Soft errors were first noted in the 1970s.

- The primary cause was the use of slightly radioactive isotopes in the chip packaging, such as lead (Pb-212).

- Materials in chip packaging are now carefully screened to substantially eliminate radioactivity.

- Soft errors are now very rare and mostly caused by cosmic rays.

We'll come back to these later.

Beyond DRAM

Soft errors are not confined to DRAM alone. Any circuit will glitch if hit by a sufficiently energetic particle - whether DRAM, SRAM, or a logic element. DRAM began to be affected by soft errors when the energy stored in the capacitor shrank to be on the order of the energy induced by an alpha particle. SRAM was not initially affected because its cells are actively driven via six transistors, whose energy level is considerably higher. Nonetheless, as silicon feature sizes have shrunk it is now quite possible for SRAM to suffer a soft error. Soft errors in logic elements are somewhat less noticeable in that they will correct themselves on the next clock cycle, while an error in a storage element will persist until it is rewritten.
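To put rough numbers on "on the order of", here is a back-of-the-envelope sketch in Python. The cell capacitance, cell voltage, and alpha energy are assumed round figures chosen for illustration, not data for any real part, and the function names are made up for this example. The only physical constants are the electron charge and the roughly 3.6 eV needed to free one electron-hole pair in silicon.

```python
# Back-of-the-envelope comparison of stored charge vs. deposited charge.
# The cell and particle parameters passed in below are ASSUMED values.
ELECTRON_CHARGE_C = 1.602176634e-19   # coulombs per electron
EV_PER_PAIR_SILICON = 3.6             # approx. eV to free one electron-hole pair in Si


def stored_charge_fc(capacitance_ff, voltage_v):
    """Charge held by a cell capacitor, Q = C * V (fF * V gives fC)."""
    return capacitance_ff * voltage_v


def deposited_charge_fc(particle_energy_mev):
    """Upper bound on charge freed if the particle stops entirely in silicon."""
    pairs = particle_energy_mev * 1e6 / EV_PER_PAIR_SILICON
    return pairs * ELECTRON_CHARGE_C * 1e15   # coulombs -> femtocoulombs


cell_fc = stored_charge_fc(capacitance_ff=30.0, voltage_v=1.5)   # assumed DRAM cell
alpha_fc = deposited_charge_fc(particle_energy_mev=5.0)          # assumed alpha energy
print(f"cell stores ~{cell_fc:.0f} fC, alpha can free up to ~{alpha_fc:.0f} fC")
```

Only a fraction of the freed charge is actually collected by any one cell, so this is an upper bound rather than a prediction. The point is simply that the two quantities sit within an order of magnitude of each other.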

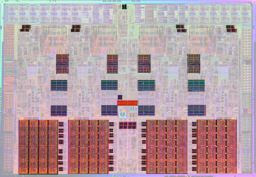

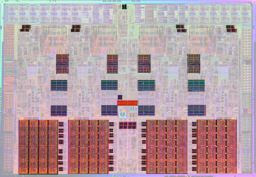

Modern CPUs include a great deal of SRAM on the die, comprising the caches, TLBs, reorder buffers, and numerous other uses. The image shown here is Intel's quad core Nehalem die, with the SRAM areas highlighted and logic deemphasized (both based on my best guesses). SRAM is a significant fraction of the die. Many, many other ASIC and CPU designs contain similar or higher fractions of SRAM.

What impact can a bitflip in the SRAM have? Consider that there is just a one-bit difference between the following two instructions: the immediate operands 1 and 32769 differ only in bit 15. A bitflip can make the software come up with results which should be impossible.

ADD R1,1

ADD R1,32769
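The arithmetic behind that pair can be checked with a short Python sketch. It assumes the immediate is stored as a plain binary field in the instruction word, and `flip_bit` is a helper invented for this example.

```python
def flip_bit(value, bit):
    """Return value with one bit position inverted (bit 0 = least significant)."""
    return value ^ (1 << bit)


immediate = 1
corrupted = flip_bit(immediate, 15)   # a single upset in bit 15
print(f"{immediate:#06x} -> {corrupted:#06x} ({corrupted})")
```

Flipping the same bit a second time restores the original value, which is why a soft error leaves no trace once the cell is rewritten.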

Even more bizarrely a bitflip could change some random instruction into a memory reference, such as a load or store. As the register being dereferenced would likely not contain a valid pointer, the process would segfault for inexplicable reasons.

To prevent this problem Intel and all major CPU vendors protect their caches and other on-chip memories using Error Correcting Codes, but many ASIC designs do not. They might implement parity, but on-chip ASIC memories commonly have no error checking at all. A soft error will simply corrupt whatever was in the SRAM, which will only be noticed if it causes the ASIC to misbehave in some perceptible way.
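The difference between parity and ECC can be sketched in a few lines of Python. This is a toy illustration, not how any particular CPU or ASIC implements it: it uses the textbook Hamming(7,4) code as a stand-in, where real designs would use much wider codes, and all function names are made up for the example.

```python
def parity_bit(word):
    """Even parity: 1 if the word has an odd number of set bits."""
    return bin(word).count("1") & 1


def hamming74_encode(nibble):
    """Encode 4 data bits into a 7-bit Hamming codeword (list index = position 1..7)."""
    d = [(nibble >> i) & 1 for i in range(4)]
    code = [0] * 8                                   # index 0 is unused
    code[3], code[5], code[6], code[7] = d           # data bits
    code[1] = code[3] ^ code[5] ^ code[7]            # check bit for positions 1,3,5,7
    code[2] = code[3] ^ code[6] ^ code[7]            # check bit for positions 2,3,6,7
    code[4] = code[5] ^ code[6] ^ code[7]            # check bit for positions 4,5,6,7
    return code


def hamming74_decode(code):
    """Correct at most one flipped bit, then return the 4 data bits as an int."""
    code = list(code)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos          # XOR of set positions is 0 for a valid codeword
    if syndrome:
        code[syndrome] ^= 1          # a nonzero syndrome names the flipped position
    data = [code[3], code[5], code[6], code[7]]
    return sum(bit << i for i, bit in enumerate(data))


stored = 0b1011

# Parity notices that something changed, but cannot say which bit.
flipped = stored ^ (1 << 2)
parity_detects = parity_bit(flipped) != parity_bit(stored)

# The Hamming code locates the flipped bit and repairs it.
codeword = hamming74_encode(stored)
codeword[6] ^= 1                     # simulate a particle strike on one cell
recovered = hamming74_decode(codeword)
print(parity_detects, recovered == stored)
```

A memory with parity alone can only report the error and give up. A memory with ECC can hand back the correct data and carry on, at the cost of extra storage bits per word.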

What happens if a particle strike causes the hardware to misbehave, or to get the wrong answer? Usually, we blame the software. It must be some weird bug.

Back To The Future

Returning to the earlier list of common facts about soft errors, let's focus on the last two.

- Materials in chip packaging are now carefully screened to substantially eliminate radioactivity.

- Soft errors are now very rare and mostly caused by cosmic rays.

There are many different materials used in a finished chip, beyond the silicon die and the gold wires connecting to its pins. The chip package is plastic or ceramic, which is composed of a host of different elements including boron. Solder bonds the wires to the pins. Solder used to be mostly lead, later tin, and might now be a polymer. There are heat spreading compounds and shock absorption goo, which are often organic polymers. Some of these materials are naturally slightly radioactive. For example, in nature lead (Pb-208) contains traces of polonium (Po-212), which will emit alpha particles and decay into Pb-208. Similarly boron-10 is more prone to fission than boron-11 - or so they tell me, I've no idea why.

Modern chip packaging uses refined versions of these materials, to reduce the level of undesirable isotopes and leave purified inert material behind. This is an expensive process. Each new generation of silicon imposes more stringent requirements, making the materials even more costly. It is crucial that the correct materials be used... and this is where human error can creep in.

Using the wrong packaging materials increases alpha emissions to the point where the silicon will experience an unacceptable soft error rate. Yet to control costs it is also important to not overshoot the alpha emission requirements by using a more expensive material than necessary. So each chip design may use a different mix of materials depending on its process technology, die size, and the amount of SRAM it contains. The manufacturer will have checks in place to ensure the correct materials are used, but mistakes can occur. Sometimes a batch of chips is produced which suffers an unusually high soft error rate. When this happens the manufacturer is generally loath to admit it, and will quietly replace the chips with a corrected batch. One recent case where the problem was too widespread to cover up was with the cache of the UltraSPARC-II CPU from Sun Microsystems; see point #5 of an Actel FAQ on the topic for more details.

The Moral of the Story

If you are working on low level software for a chip and run into bizarre errors, you should suspect a software problem first. Soft errors really are rare, and the manufacturing screwups described above are very uncommon. If the problem is repeatable, even if it has only happened twice, it is not a soft error. Particle strikes are too random for that. However if you keep running into different symptoms where you think "but that is impossible..." you should consider the possibility that the problem really is impossible and was introduced by a bitflip. You should start checking whether the problems are confined to a particular batch of parts, or only produced in a certain range of dates. If so, it could be that batch of parts has a problem with the packaging materials.
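Checking whether failures cluster in one batch can be as simple as grouping the failure reports by the date code stamped on the part. The Python sketch below uses entirely hypothetical serial numbers and date codes, and `failure_rate_by_lot` is a helper made up for this example.

```python
from collections import Counter


def failure_rate_by_lot(deployed, failed_serials):
    """Return {lot: fraction of that lot's units with an unexplained failure}."""
    total = Counter(deployed.values())
    failed = Counter(deployed[s] for s in set(failed_serials) if s in deployed)
    return {lot: failed[lot] / count for lot, count in total.items()}


# Hypothetical fleet: unit serial number -> date code on the suspect chip.
deployed = {
    "A1": "0840", "A2": "0840", "A3": "0840", "A4": "0840",
    "B1": "0907", "B2": "0907", "B3": "0907", "B4": "0907",
}
# Hypothetical units that hit an "impossible" failure.
failed_serials = ["B1", "B3", "B4", "A2"]

rates = failure_rate_by_lot(deployed, failed_serials)
for lot, rate in sorted(rates.items()):
    print(lot, f"{rate:.0%}")
```

With a real fleet the sample needs to be large enough that a lopsided split is not just chance, but a date code that stands far out from the rest is a good reason to start asking the manufacturer questions.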

Other resources

While researching this post I came across a few additional sources of information which I found fascinating, but did not have a good place to link to them. They are presented here for your edification and bemusement.

- An analysis of the high soft error rate of the ASC Q supercomputer at LANL. These errors were cosmic ray induced, not due to a badly packaged batch of chips. The part of the system in question did not implement ECC to correct errors, only parity to detect them and crash.

- Cypress Semiconductor published a book to explain soft errors to their customers.

- Fujitsu has a simulator to predict soft error rates, called NISES. The linked PDF is mostly in Japanese, but the images are fascinating and very illustrative.

This post was many years in the making.
