This is a series of Software Engineering Maxims Which May or May Not Be True, developed over the last few years of working at Google. Your mileage may vary. Use only as directed. Past performance is not a predictor of future results. Etc.

Maxim #1: Small teams are bigger than large teams

In my mind, the ideal size for a software team is seven engineers. It does not have to be exactly seven: six is fine, eight is fine, but the further the team gets from the ideal the harder it is to get things done. Three people isn’t enough and limits impact; fourteen is too many to coordinate and communicate among effectively.

Organizing larger projects becomes an exercise in modularizing the system to allow teams of about seven people to own the delivery of a well-defined piece of the overall product. The largest parts of the system will end up with clusters of teams working on different aspects of the system.
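A back-of-the-envelope sketch of why fourteen is so much harder to coordinate than seven. It assumes, purely for illustration, that coordination cost tracks the number of distinct pairs of people who might need to talk to each other; `pairwise_channels` is a hypothetical helper, not a measured model of any real team.

```python
def pairwise_channels(team_size: int) -> int:
    """Number of distinct pairs in a team of the given size: n * (n - 1) / 2."""
    return team_size * (team_size - 1) // 2


# Compare the team sizes mentioned in the text.
for size in (3, 7, 14):
    print(f"{size:>2} engineers -> {pairwise_channels(size):>2} pairwise channels")
```

Under that assumption, doubling the team from seven to fourteen more than quadruples the number of pairs (21 versus 91), which is one way to read the advice to split larger efforts into teams of about seven.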

Maxim #2: Enthusiasm improves productivity

By far the best way to improve engineering productivity is to have people working on something which they are genuinely enthused about. It is beneficial in many ways:

- the quality of the product will benefit from the care and attention

- people don’t let themselves get blocked by something else when they are eager to see the end result

- they’ll come up with ways to make the product even better, by way of their own resourcefulness

- people are simply happier, which has numerous benefits in morale and working environment.

There are usually way more tasks on the project wish list than can realistically be delivered. Some of those tasks will be more important than others, but it is rarely the case that there is a strict priority order in the task list. More often we have broad buckets of tasks:

- crucial, can’t have the product without it

- nice to have

- won’t do yet: too much crazy, or get to it eventually, or something

The crucial tasks have to be done, even the ones which no-one particularly wants to do.

In my mind, when selecting from the (lengthy) list of nice-to-have tasks, the enthusiasm of the engineering team should be a factor in the choices. The team will deliver more if they can work on things they are genuinely interested in doing.

Maxim #3: Project plans should have top-down and bottom-up elements

It is possible for a team to work entirely from a task list, where Product Management and the Leadership add stuff and the team marks things off as they are completed. This is not a great way to work, but it is possible.

It is better if the team spends some fraction of its time on tasks which germinated from within the team. This is not merely 20% time: a portion of regular work should be on tasks which the team itself came up with.

- The team tends to generate immediately practical ideas, things which build upon the product as it exists today and provide useful extensions.

- It is good for morale.

- It is good for careers. Showing initiative and technical leadership is good for a software engineer.

Maxim #4: Bricking the fleet is bad for business

Activities with a risk of irreparable consequences deserve more care. This sounds obvious, like something which no-one would ever disagree with, but in the day-to-day engineering work those tasks won’t look like something which requires that extra level of care. Instead they will look like something which has been running for years and never failed, something which fades into the background and can be safely ignored because it is so reliable.

Calls to add this risk will not be phrased as "be cavalier about something which can ruin us." They will be phrased as increasing velocity, or lowering cost, or not being stuck in doing things the old way. All of those might be true; the change just needs more care and attention.

Maxim #5: There is an ideal rate of breakage: no more, no less

Breaking things too often is a sign of trying to do too much too quickly, and either excessively dividing attention or not allowing time for proper care to be taken.

Not breaking things often enough is a sign of the opposite problem: not pushing hard enough.

I’m sure it is theoretically possible for a team to move at an absolutely optimal speed such that they maximize their results without ever breaking anything, but I’ve no idea how to achieve it. The next best thing is to strive for just the right amount of breakage: not too much, not too little.

Maxim #6: It’s a marathon, not a sprint

"Launch and iterate" is a catchy phrase, but often turns into an excuse to launch something sub-par and then never get around to improving it.

Yet there is a real advantage to being in a market for the long term, launching a product and improving it. Customer happiness is earned over time, not all at once with a big launch.

- This means structuring teams for sustained effort, not big product pushes.

- It means triaging bugs: not everything will get fixed right away, but everything should at least be looked at to assess relative priority.

- It means really thinking about how to support the product in the field.

- It means not running projects in a way which burns people out.

Maxim #7: The service is the product

The product is not the code. The product is not the specific feature we launched last week, nor the big thing we’re working on launching next week.

The product is the Service. The Product which customers care about is that they can get on the Internet and do what they need to do, that they can turn on the TV and have it work, that they can make phone calls, whatever it is they set out to do.

Maxim #8: Money is not the only motivator

A monetary bonus is one tool available for managers to reward good work. It is not the only tool, and is not necessarily the best tool for all situations.

For example, to encourage SWEs to write automated system tests we created the Yakthulhu of Testing. It is a tiny Cthulhu made from the hair of shaved yaks (*). A Yakthulhu can be obtained by checking in one’s first automated test to run against the product.

(*) It really is made from yak hair. Yak hair yarn is a thing which one can buy. Disappointingly though, they do not shave the yaks. They comb the yaks.

Maxim #9: Evolve systems as a series of incremental changes

There is substantial value in code which has seen action in the field. It contains a series of small and large decisions, fixes, and responses which made the system better over time. Generally these decisions are not recorded as a list of lessons learned to be applied to a rewrite or to the next system.

Whenever possible, a system should evolve as a series of incremental changes which take it from where it is to where we want it to be. Doing this incrementally has several advantages:

- benefits are delivered to customers much earlier, as the earliest pieces to be completed don’t have to wait for the later pieces before deployment.

- there is no stagnant period in the field after work on the old system is stopped but before the new system is ready.

- once the system is close enough to where we want it to be that other stuff moves higher on the list of priorities, we can stop. We don’t have to push on to finish rewriting all of it.

Maxim #10: Risk is multiplicative

There is a school of thought that when multiple large projects are going on, and there is some relation between them, they should be tied together and made dependent upon each other. The arguments for doing so are often:

- "We’re going to pay careful attention to those projects, making them one project means we’ll be able to track them more effectively."

- "There was going to be duplication of effort, we can share implementation of the common systems."

- "We can better manage the team if we have more people available to be redirected to the pieces which need more help."

The trouble with this is that it glosses over the fundamental decision being made: nothing can ship until all of it ships. Combining risks makes a single, bigger risk out of the multiple smaller risks.
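As a rough illustration of why the combined risk is bigger, here is a minimal sketch. It assumes the projects succeed or fail independently of each other, which is a simplification. The probabilities are made up for the example, and `chance_all_ship` is a hypothetical helper rather than anything from a real planning tool.

```python
import math


def chance_all_ship(on_time_probabilities):
    """Probability that every project ships on time, assuming independence."""
    return math.prod(on_time_probabilities)


# Hypothetical odds of each project shipping on time when run separately.
separate = [0.9, 0.8, 0.7]

# Tied together, nothing ships unless all of them ship.
combined = chance_all_ship(separate)

print(f"riskiest single project ships on time: {min(separate):.0%}")
print(f"combined project ships on time:        {combined:.0%}")
```

With these invented numbers the riskiest single project has a 70% chance of shipping on time, while the combined project has only about a 50% chance. The combined odds can never be better than the worst individual project, and are usually noticeably worse.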

Maxim #11: Don’t shoot the monitoring

There is a peculiar dynamic when systems contain a mix of modules with very good monitoring along with modules with very poor monitoring; the modules with good monitoring report all of the errors.

The peculiarity becomes damaging if the result is to have all of the bugs filed against the components with good monitoring. It makes it look like those modules are full of bugs, when the reality is likely the opposite.

Maxim #12: No postmortem prior to mortem

There are going to be emergencies. It happens, despite our best efforts to anticipate risks. When it happens, we go into damage control mode to resolve it.

People not involved in handling the emergency will begin to ask about a postmortem almost immediately, even before the problem is resolved. It is important to not begin writing the postmortem until the problem has been mitigated. Starting earlier turns a unified crisis response into a hotbed of fingerpointing and intrigue. Even in a culture of blameless postmortems, it is difficult to avoid the harmful effects of the hints of blame while writing that blameless postmortem.

It is fine, even crucial, to save information for later drafting of the postmortem. IRC logs, lists of bugs and CLs, and so on will all be needed eventually. Just don’t start a draft of a postmortem while still antemortem.

Maxim #13: Cadence trumps mechanism

We tend to focus a lot on mechanisms in software engineering as a way to increase velocity or productivity. We reduce the friction of releasing software, or we automate something, and we expect that this will result in more of the activity which we want to optimize.

Inertia is a powerful thing. A product at rest will tend to stay at rest; a product in motion will tend to stay in motion. The best way to release a bunch of software is to release a bunch of software, by setting a cadence and sticking to it. People get used to a cadence and it becomes self-reinforcing. Making something easier may or may not result in better velocity; making it more regular almost always does.

Maxim #14: Churn wastes more than time

Project plans change. It happens.

When plans change too often, or when a crucial plan is suddenly cancelled and destaffed, we burn more than just the time which was spent on the old plan. We burn confidence in the next plan. People don’t commit as readily and don’t put their best effort into it until they’re pretty sure the new plan will stick.

In the worst case, this becomes self-reinforcing. New plans fail because of the lack of effort engendered by the failure of previous plans.

Maxim #15: Sometimes the hard way is the right way

For any given task, there is often some person somewhere who has done it before and knows exactly what to do and how to do it. For things which are likely to be done once in a project and never repeated, relying on that person (either to do it or to provide exactly what to do step by step) can significantly speed things up.

For things which are likely to be repeated, or refined, or iterated upon, it can be better to not rely on that one expert. Learning by doing results in a much deeper understanding than just following directions. For an area which is core to the product or will be extended upon repeatedly, the deeper understanding is valuable, and is worth acquiring even if it takes longer.

Maxim #16: Spreading knowledge makes it thicker

Pigeonholing is rampant in software engineering: engineers become experts in a particular area and always end up being assigned tasks in that area.

There are occasions where that is optimal, where becoming a subject matter expert takes substantial time and effort, but these situations are rare. In most cases it is not the expense of becoming an expert that keeps an engineer doing similar work over and over; it is just complacency.

Areas of the product where the team needs to continue to expend effort over a long time period should move around to different members of the team. Multiple people familiar with an area will reinforce each other. Additionally, teaching the next person is a very effective way to get a better understanding for oneself.

Maxim #17: Software Managers must code

When one first transitions from being an individual contributor software engineer to being a manager, there is a decision to be made: whether to stop doing work as an individual contributor and focus entirely on the new role in guiding the team, or to keep doing IC work as well as management.

There are definitely incentives to focus entirely on management: one can have a much bigger impact via effective use of a team than by one’s own effort alone. When a new manager makes that choice, they get a couple of really good years. They have more time to plan, more time to strategize, and the team carries it all out.

The danger in this path comes later: one forgets how hard things really are. One forgets how long things take. The plans and strategies become less effective because they no longer reflect reality.

Software managers need to continue to do engineering work, at least a little, to stay grounded in reality.

Maxim #18: Manage without a net

Managers and Tech Leads cannot depend on escalation. We sometimes believe that the layers of management above us exist in order to handle things which the lower layers are having trouble with. In reality, those upper layers have their own goals and priorities, and they generally do not include handling things bubbling up from below.

Do not rely on Deus Ex Magisterio from above; organizations do not work that way.

Maxim #19: Goodwill can be spent. Spend wisely.

Doing good work accumulates goodwill. It is helpful to have a pile of goodwill; it tends to make interactions smoother and generally makes things easier.

Nonetheless, it is possible to spend goodwill on something important: to redirect a path, to right a wrong, etc. Sometimes spending goodwill is the right thing to do. Don’t spend it frivolously.

Maxim #20: Everyone finds their own experience most compelling

"We should do A. I did that on my last project, and it was great."

"No, we should do B. I did that on my last project, and it was great."

Comparing experiences rarely builds consensus: everyone believes their own experiences to be the most convincing. Comparing experiences really only works when there is a power imbalance, when the person advocating A or B also happens to be a Decider.

In most cases, simply being aware of this phenomenon is sufficient to avoid damaging disagreements. The team needs to find other ways to pick their path forward, such as shared experiences or quantitative measurements, not just firmly held belief.

Its most pernicious problem for data networking was in dealing with congestion. There was no mechanism for flow control, because ATM evolved out of a circuit switched world with predictable traffic patterns. Congestive problems come when you try to switch packets and deal with bursty traffic. In an ATM network the loss of a single cell would render the entire packet unusable, but the network would be further congested carrying the remaining cells of that packet's corpse.
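To put a rough number on how one lost cell poisons a whole packet, here is a small sketch. It assumes cells are lost independently with a fixed probability, and it ignores adaptation-layer trailers and padding, so the cell count is a lower bound. The function names and the 1% loss rate are invented for the example; the 48-byte payload is the standard ATM cell payload size.

```python
import math

CELL_PAYLOAD_BYTES = 48  # payload carried by one 53-byte ATM cell


def cells_per_packet(packet_bytes: int) -> int:
    """Cells needed to carry a packet, ignoring adaptation-layer overhead."""
    return math.ceil(packet_bytes / CELL_PAYLOAD_BYTES)


def packet_loss_probability(packet_bytes: int, cell_loss_probability: float) -> float:
    """Chance that at least one cell of the packet is lost (independent losses)."""
    cells = cells_per_packet(packet_bytes)
    return 1 - (1 - cell_loss_probability) ** cells


# Hypothetical example: a 1500 byte packet on a link dropping 1% of cells.
print(cells_per_packet(1500))
print(f"{packet_loss_probability(1500, 0.01):.1%}")
```

Under these assumptions a 1500 byte packet spans 32 cells, so even a small cell loss rate turns into a much larger packet loss rate, and the surviving cells of each dead packet still consume capacity.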

Because I brought it up earlier, we'll conclude with a discussion of page coloring. I am not satisfied with the

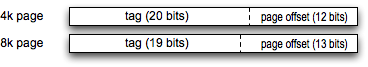

Before fetching a value from memory the CPU consults its cache. The least significant bits of the desired address are an offset into the cache line, generally 4, 5, or 6 bits for a 16/32/64 byte cache line. Separately, the CPU defines a page size for the virtual memory system. 4 and 8 Kilobytes are common. The least significant bits of the address are the offset within the page, 12 or 13 bits for 4 or 8 K respectively. The most significant bits are a page number, used by the CPU cache as a tag. The hardware fetches the tag of the selected cache lines to check against the upper bits of the desired address. If they match, it is a cache hit and no access to DRAM is needed.
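The bit slicing described above can be sketched in a few lines. This is only an illustration: `split_address` is a hypothetical helper, the 64 byte line and 4 K page are just two of the sizes mentioned, and the example address is arbitrary.

```python
def split_address(addr: int, line_bytes: int = 64, page_bytes: int = 4096) -> dict:
    """Split an address into the fields described in the text.

    Both sizes must be powers of two so that each field is a whole number of bits.
    """
    for size in (line_bytes, page_bytes):
        if size <= 0 or size & (size - 1):
            raise ValueError("sizes must be powers of two")

    page_bits = page_bytes.bit_length() - 1  # 4096 -> 12, 8192 -> 13

    return {
        "line_offset": addr & (line_bytes - 1),  # low 4, 5, or 6 bits
        "page_offset": addr & (page_bytes - 1),  # low 12 or 13 bits
        "page_number": addr >> page_bits,        # upper bits, compared as the tag
    }


fields = split_address(0x12345678)
print({name: hex(value) for name, value in fields.items()})
```

For the arbitrary address `0x12345678`, the offset within a 64 byte line is `0x38`, the offset within a 4 K page is `0x678`, and the page number is `0x12345`. A hit, in the terms used above, means the tag stored with the selected cache line equals that page number.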

Developers work N hours per day, where N varies considerably, but the entire time is not spent writing code. We have interruptions, from meetings to questions from colleagues to physical necessities. The amount of time spent actually developing can be a small slice of one's day. More pressingly, we don't just sit down and immediately start pounding out program statements. There is a warm up period, to recall to mind the details of what is being worked on. Reviewing notes, re-reading code produced in the previous session, and so forth get one back into the mode of being productive. Interruptions which disrupt this productive mode have far greater impact than the few minutes it takes to answer the question.

Wait: does that sound weird, based on your experience? You're right, I made it all up. We're not organized as one enormous group, we're grouped into smaller teams like everybody else. Yet to a degree, the larger group has to be able to coordinate between every single person, every day. How is this accomplished?
