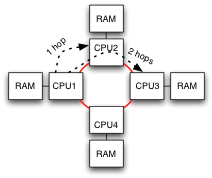

Non-Uniform Memory Access (NUMA) is common in modern x86 servers. RAM is attached to each CPU, and the CPUs connect to each other. Any CPU can access any location in RAM, but will incur additional latency if there are multiple hops along the way. This is the non-uniform part: some portions of memory take longer to access than others.

Yet the NUMA we use today is NUMA in the small. In the 1990s NUMA aimed to make very, very large systems commonplace. There were many levels of bridging, each adding yet more latency. RAM attached to the local CPU was fast, and RAM for other CPUs on the same board was somewhat slower. RAM on boards in the same local grouping took longer still, while RAM on the other side of the chassis took forever. Nonetheless this was considered a huge advancement in system design, because it allowed software to access vast amounts of memory in the system with a uniform programming interface... except for performance.

Operating system schedulers, which had previously run any task on any available CPU, would randomly exhibit extremely bad behavior: a process whose CPU and RAM ended up far apart would run an order of magnitude slower. NUMA meant that all RAM was equal, but some was more equal than others. Operating systems desperately added notions of RAM affinity to go along with CPU and cache affinity, but reliably good performance was difficult to achieve.
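To make the scheduling problem concrete, here is a toy model, not a description of any real scheduler. The `DISTANCE` table is invented for illustration (loosely in the style of the relative node distances that `numactl --hardware` prints on Linux), and `naive_pick` and `affinity_pick` are hypothetical names.

```python
# Hypothetical relative access costs on a 4-node NUMA machine.
# Row = node the CPU sits on, column = node the RAM sits on.
# The numbers are made up; only their ordering matters here.
DISTANCE = [
    [10, 20, 40, 80],
    [20, 10, 40, 80],
    [40, 40, 10, 20],
    [80, 80, 20, 10],
]


def naive_pick(idle_nodes, memory_node):
    """Pre-NUMA behavior: run on whichever idle CPU comes up first."""
    return idle_nodes[0]


def affinity_pick(idle_nodes, memory_node):
    """RAM-affinity behavior: prefer the idle CPU closest to the task's memory."""
    return min(idle_nodes, key=lambda node: DISTANCE[node][memory_node])


if __name__ == "__main__":
    idle = [3, 1, 0]   # idle CPU nodes, in the order the scheduler found them
    memory_node = 0    # the task's pages live on node 0
    for pick in (naive_pick, affinity_pick):
        node = pick(idle, memory_node)
        print(pick.__name__, "-> node", node, "cost", DISTANCE[node][memory_node])
```

With these made-up numbers the naive choice lands on node 3 at a cost of 80, while the affinity-aware choice lands on node 0 at a cost of 10. The hard part in a real system is that the closest CPU is often busy, which is one reason reliably good performance was so elusive.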

As an industry we concluded that NUMA in moderation is good, but too much NUMA is bad. Those enormous NUMA systems have mostly lost out to smaller servers clustered together, where each server uses a bit of NUMA to improve its own scalability. The big jump in latency to get to another server is accompanied by a change in API, to use the network instead of memory pointers.

A Segue to Web Applications

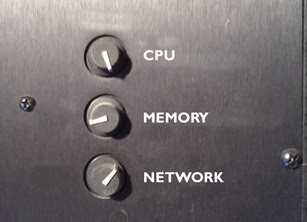

Modern web applications can make tradeoffs between CPU utilization, memory footprint, and network bandwidth. Increase the amount of memory available for caching, and reduce the CPU required to recalculate results. Shard the data across more nodes to reduce the memory footprint on each, at the cost of increasing network bandwidth. In many cases these tradeoffs don't need to be baked deep into the application; they can be tweaked via relatively simple changes. They can be adjusted to tune the application for RAM size, or for the availability of network bandwidth.
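As a small illustration of the memory-for-CPU trade, the sketch below counts how often a stand-in "expensive" computation actually runs for different cache sizes. The function name `count_recomputations` and the workload are hypothetical; the only real API used is `functools.lru_cache` from the Python standard library.

```python
from functools import lru_cache


def count_recomputations(requests, cache_entries):
    """Replay `requests` through an LRU cache holding `cache_entries` results.

    Returns how many times the expensive function really had to run.
    """
    computed = 0

    @lru_cache(maxsize=cache_entries)
    def render(key):
        nonlocal computed
        computed += 1                          # one unit of CPU work spent
        return sum(i * i for i in range(key))  # stand-in for real work

    for key in requests:
        render(key)
    return computed


if __name__ == "__main__":
    requests = [1, 2, 3] * 4   # 12 requests over 3 distinct keys
    for size in (0, 3):
        print("cache entries:", size,
              "recomputations:", count_recomputations(requests, size))
```

With no cache (`cache_entries=0`) all 12 requests are recomputed; with room for 3 entries only the first 3 are. The cache size is a single number, which is the kind of relatively simple change the paragraph above has in mind.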

Further Segue To Overlay Networks

There is a lot of effort being put into overlay networks for virtualized datacenters, to create an L2 network atop an L3 infrastructure. This allows the infrastructure to run as an L3 network, which we are pretty good at scaling and managing, while the service provided to the VMs behaves as an L2 network.

Yet once the packets are carried in IP tunnels they can, through the magic of routing, be carried across a WAN to another facility. The datacenter network can be transparently extended to include resources in several locations. Transparently, except for performance. The round trip time across a WAN will inevitably be longer than across the LAN; the speed of light demands it. Even for geographically close facilities the bandwidth available over a WAN will be far less than the bandwidth available within a datacenter, perhaps orders of magnitude less. Application tuning parameters set based on the performance within a single datacenter will be horribly wrong across the WAN.
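The speed-of-light floor is easy to estimate. The sketch below assumes signals travel through fiber at roughly two thirds of the speed of light in vacuum (a common rule of thumb, not a measured figure), and it ignores routing detours, queuing, and equipment delay, so real round trip times will be higher. The function name and the example distances are hypothetical.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458   # in vacuum
FIBER_VELOCITY_FACTOR = 2 / 3           # assumption: rough figure for glass fiber


def min_rtt_ms(distance_km):
    """Lower bound on round trip time over a fiber path of the given length."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_PER_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_s * 1000.0


if __name__ == "__main__":
    # Hypothetical path lengths: across a datacenter hall vs. to a distant site.
    for label, km in (("within the datacenter", 0.1), ("across the WAN", 1000)):
        print(f"{label}: at least {min_rtt_ms(km):.3f} ms")
```

Under these assumptions a 1000 km path cannot do better than about 10 ms per round trip, while a 100 m path within the building has a floor of about a microsecond. A timeout or retry interval tuned for the second case has no chance of being right for the first.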

I've no doubt that people will do it anyway. We will see L2 overlay networks being carried across VPNs to link datacenters together transparently (except for performance). Like the OS schedulers suddenly finding themselves in a NUMA world, software infrastructure within the datacenter will find itself in a network where some links are more equal than others. As an industry, we'll spend a couple of years figuring out whether that was a good idea or not.
