It is an interesting time to be involved in datacenter networking. There have been announcements recently of two competing proposals for running virtual L2 networks as an overlay atop an underlying IP network, VXLAN and NVGRE. Supporting an L2 service is important for virtualized servers, which need to be able to move from one physical server to another without changing their IP address or interrupting the services they provide. Having written about VXLAN in a series of three posts, I now turn to NVGRE. Ivan Pepelnjak has already posted about it on IOShints, which I recommend reading.

NVGRE encapsulates L2 frames inside tunnels to carry them across an L3 network. As its name implies, it uses GRE tunneling. GRE has been around for a very long time, and is well supported by networking gear and analysis tools. An NVGRE Endpoint uses the Key field in the GRE header to hold the Tenant Network Identifier (TNI), a 24 bit space of virtual LANs.
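
To make the header layout concrete, here is a minimal sketch of building and parsing the GRE header an NVGRE Endpoint might prepend to an inner Ethernet frame. The K flag and the 32 bit Key field are standard GRE. The protocol type 0x6558 (Transparent Ethernet Bridging) and the placement of the 24 bit TNI in the upper bits of the Key are my assumptions for illustration, as are the function names.

```python
import struct

GRE_KEY_PRESENT = 0x2000   # K bit in the 16-bit GRE flags/version word
ETHERTYPE_TEB = 0x6558     # Transparent Ethernet Bridging (assumed payload type)

def nvgre_header(tni: int) -> bytes:
    """Build an 8-byte GRE header carrying a 24-bit TNI in the Key field."""
    if not 0 <= tni < (1 << 24):
        raise ValueError("TNI must fit in 24 bits")
    # Assumption: TNI occupies the upper 24 bits of the Key, low 8 bits zero.
    key = tni << 8
    return struct.pack("!HHI", GRE_KEY_PRESENT, ETHERTYPE_TEB, key)

def encapsulate(tni: int, inner_frame: bytes) -> bytes:
    """Prepend the GRE header; the inner frame carries no Ethernet CRC."""
    return nvgre_header(tni) + inner_frame

def parse_tni(packet: bytes) -> int:
    """Recover the TNI from the start of a GRE payload built by encapsulate()."""
    flags, _proto, key = struct.unpack("!HHI", packet[:8])
    if not flags & GRE_KEY_PRESENT:
        raise ValueError("GRE header has no Key field")
    return key >> 8
```

The outer Ethernet and IP headers are left out here; in practice the host's network stack or the NIC would supply them.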

The encapsulated packet has no Inner CRC. When VMs send packets to other VMs within a server they do not calculate a CRC; one is added by a physical NIC when the packet leaves the server. As the NVGRE Endpoint is likely to be a software component within the server, prior to hitting any NIC, the frames have no CRC. This is another case where even on L2 networks, the Ethernet CRC does not work the way our intuition would suggest.

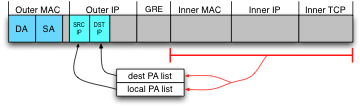

The NVGRE draft refers to IP addresses in the outer header as Provider Addresses, and the inner header as Customer Addresses. NVGRE can optionally also use an IP multicast group for each TNI to distribute L2 broadcast and multicast packets.

Not Quite Done

As befits its "draft" designation, a number of details in the NVGRE proposal are left to be determined in future iterations. One largish bit left unspecified is mapping of Customer Addresses to Provider. When an NVGRE Endpoint needs to send a packet to a remote VM, it must know the address of the remote NVGRE Endpoint. The mechanism to maintain this mapping is not yet defined, though it will be provisioned by a control function communicating with the Hypervisors and switches.
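
Since the draft does not define this mechanism, the following is purely a hypothetical sketch of the lookup an Endpoint would need: a table keyed by tenant network and Customer MAC, filled in by some control function. The class and method names are invented for illustration.

```python
from typing import Dict, Optional, Tuple

class EndpointDirectory:
    """Hypothetical mapping of (TNI, Customer MAC) to a remote Provider Address."""

    def __init__(self) -> None:
        self._table: Dict[Tuple[int, str], str] = {}

    def provision(self, tni: int, customer_mac: str, provider_address: str) -> None:
        # Called by the (unspecified) control function when a VM is placed or moved.
        self._table[(tni, customer_mac.lower())] = provider_address

    def lookup(self, tni: int, customer_mac: str) -> Optional[str]:
        # Returns None when the destination is unknown; what happens then
        # (drop, flood, query a directory) is not defined by the draft.
        return self._table.get((tni, customer_mac.lower()))

directory = EndpointDirectory()
directory.provision(42, "00:16:3E:00:00:01", "10.0.1.1")
print(directory.lookup(42, "00:16:3e:00:00:01"))
```

When a VM moves, the control function would call `provision` again with the new Provider Address, and every Endpoint holding the old entry would need to hear about it.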

Optional Multicast?

The NVGRE draft calls out broadcast and multicast support as optional, provided only if the network operator chooses to support it. To operate as a virtual Ethernet network a few broadcast protocols are essential, like ARP and IPv6 ND. Presumably if broadcast is not available, the NVGRE Endpoint would respond to these requests to its local VMs.

Yet I don't see how that can work in all cases. The NVGRE control plane can certainly know the Provider Address of all NVGRE Endpoints. It can know the MAC address of all guest VMs within the tenant network, because the Hypervisor provides the MAC address as part of the virtual hardware platform. There are notable exceptions where guest VMs use VRRP, or make up locally administered MAC addresses, but I'll ignore those for now.

I don't see how an NVGRE Endpoint can know all Customer IP Addresses. One of two things would have to happen:

- Require all customer VMs to obtain their IP from the provider. Even backend systems using private, internal addresses would have to get them from the datacenter operator so that NVGRE can know where they are.

- Implement a distributed learning function where NVGRE Endpoints watch for new IP addresses sent by their VMs and report them to all other Endpoints.

The current draft of NVGRE makes no mention of either such function, so we'll have to watch for future developments.

The earlier VL2 network also did not require multicast and handled ARP via a network-wide directory service. Many VL2 concepts made their way into NVGRE. So far as I understand it, VL2 assigned all IP addresses to VMs and could know where they were in the network.

Multipathing

An important topic for tunneling protocols is multipathing. When multiple paths are available to a destination, either LACP at L2 or ECMP at L3, the switches have to choose which link to use. It is important that packets on the same flow stay in order, as protocols like TCP use excessive reordering as an indication of congestion. Switches hash packet headers to select a link, so packets with the same headers will always choose the same link.

Tunneling protocols have issues with this type of hashing: all packets in the tunnel have the same header. This limits them to a single link, and congests that one link for other traffic. Some switch chips implement extra support for common tunnels like GRE, to include the Inner header in the hash computation. NVGRE would benefit greatly from this support. Unfortunately, it is not universal amongst modern switches.
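
A small simulation illustrates the problem. It is only a sketch: real switch chips use their own hash functions and field selections, so SHA-256 here is a stand-in, and the addresses and port numbers are made up.

```python
import hashlib

def select_link(header_fields: tuple, num_links: int) -> int:
    """Pick an egress link by hashing header fields (stand-in for a switch hash)."""
    digest = hashlib.sha256(repr(header_fields).encode()).digest()
    return int.from_bytes(digest[:4], "big") % num_links

NUM_LINKS = 4

# Outer header shared by every packet in the tunnel:
# (source PA, destination PA, IP protocol 47 = GRE).
outer = ("10.0.0.1", "10.0.1.1", 47)

# 64 distinct inner flows between two VMs, differing only in TCP source port.
inner_flows = [("192.168.1.10", "192.168.1.20", 6, 40000 + i, 80) for i in range(64)]

# A switch that hashes only the outer header sees one flow.
links_outer_only = {select_link(outer, NUM_LINKS) for _ in inner_flows}

# A switch that also hashes the inner header sees 64 flows.
links_with_inner = {select_link(outer + flow, NUM_LINKS) for flow in inner_flows}

print("links used, outer header only:", len(links_outer_only))
print("links used, inner header included:", len(links_with_inner))
```

With only the outer header in the hash, every inner flow lands on the same link no matter how many there are.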

The NVGRE draft proposes that each NVGRE Endpoint have multiple Provider Addresses. The Endpoints can choose one of several source and destination IP addresses in the encapsulating IP header, to provide variance to spread load across LACP and ECMP links. The draft says that when the Endpoint has multiple PAs, each Customer Address will be provisioned to use one of them. In practice I suspect it would be better were the NVGRE Endpoint to hash the Inner headers to choose addresses, and distribute the load for each Customer Address across all links.
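
To be clear about what I am suggesting, here is a minimal sketch of an Endpoint hashing the Inner headers to pick a Provider Address pair. This is my speculation rather than anything in the draft; the function name, the choice of hash, and the flow tuple layout are all assumptions.

```python
import hashlib
from typing import Sequence, Tuple

def choose_provider_pair(
    inner_flow: tuple,
    local_pas: Sequence[str],
    remote_pas: Sequence[str],
) -> Tuple[str, str]:
    """Map an inner flow to a (source PA, destination PA) pair.

    The same flow always maps to the same pair, so its packets stay in
    order, while different flows spread across all available pairs.
    """
    digest = hashlib.sha256(repr(inner_flow).encode()).digest()
    h = int.from_bytes(digest[:8], "big")
    src = local_pas[h % len(local_pas)]
    dst = remote_pas[(h // len(local_pas)) % len(remote_pas)]
    return src, dst

local_pas = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]
remote_pas = ["10.0.1.1", "10.0.1.2", "10.0.1.3", "10.0.1.4"]
flow = ("192.168.1.10", "192.168.1.20", 6, 40001, 80)
print(choose_provider_pair(flow, local_pas, remote_pas))
```

The receiving Endpoint would have to accept traffic for a given Customer Address on any of its Provider Addresses for this to work.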

Using multiple IP addresses for load balancing is clever, but I can't easily predict how well it will work. The number of different flows the switches see will be relatively small. For example if each endpoint has four addresses, the total number of different header combinations between any two endpoints is sixteen. This is sixteen times better than having a single address each, but it is still not a lot. Unbalanced link utilization seems quite possible.
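
The arithmetic is easy to check, and a quick experiment shows how sixteen combinations might fall across a handful of links. This is a sketch under assumptions: SHA-256 stands in for whatever hash a real switch uses, and the addresses and the four-link group are made up.

```python
import hashlib
from collections import Counter
from itertools import product

def select_link(header_fields: tuple, num_links: int) -> int:
    """Pick an egress link by hashing header fields (stand-in for a switch hash)."""
    digest = hashlib.sha256(repr(header_fields).encode()).digest()
    return int.from_bytes(digest[:4], "big") % num_links

NUM_LINKS = 4
local_pas = [f"10.0.0.{i}" for i in range(1, 5)]    # four PAs on one Endpoint
remote_pas = [f"10.0.1.{i}" for i in range(1, 5)]   # four PAs on the other

# Every (source PA, destination PA) combination the switches can see.
pairs = list(product(local_pas, remote_pas))
print("distinct outer headers:", len(pairs))

# How many of those combinations land on each link of the group.
usage = Counter(select_link(pair, NUM_LINKS) for pair in pairs)
for link in range(NUM_LINKS):
    print(f"link {link}: {usage[link]} of {len(pairs)} combinations")
```

A perfectly even split would put four combinations on each link, but nothing about a hash over sixteen inputs guarantees that.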

Aside: Deliberate Multipathing

The relatively limited variance in headers leads to an obvious next step: ensure the traffic is balanced by predicting what the switches will do, and choosing Provider IP addresses accordingly. In networking today we tend to solve problems by making the edges smarter.

The NVGRE draft says that selection of a Provider Address is provisioned to the Endpoint. Each Customer Address will be associated with exactly one Provider Address to use. I suspect that selection of Provider Addresses is expected to be done via an optimization mechanism like this, but I'm definitely speculating.

I'd caution that this is harder than it sounds. Switches use the ingress port as part of the hash calculation. That is, the same packet arriving on a different ingress port will choose a different egress link within the LACP/ECMP group. To predict behavior one needs a complete wiring diagram of the network. In the rather common case where several LACP/ECMP groups are traversed along the way to a destination, the link selected by each previous switch influences the hash computation of the next.

Misc Notes

- The NVGRE draft mentions keeping an MTU state per Endpoint, to avoid fragmentation. Details will be described in future drafts. NVGRE certainly benefits from a datacenter network with a larger MTU, but will not require it.

- VXLAN describes its overlay network as existing within a datacenter. NVGRE explicitly calls for spanning across wide area networks via VPNs, for example to connect a corporate datacenter to additional resources in a cloud provider. I'll have to cover this aspect in another post, as this one is too long already.
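
On the MTU point, the encapsulation overhead is simple to work out. The sketch below assumes an outer IPv4 header without options, a GRE header carrying only the Key field, and an untagged inner Ethernet header with no CRC; the function name is mine.

```python
OUTER_IPV4_HEADER = 20   # bytes, assuming no IP options
GRE_HEADER_WITH_KEY = 8  # 4 bytes base GRE header + 4 bytes Key
INNER_ETHERNET = 14      # destination MAC, source MAC, EtherType; no VLAN tag, no CRC

OVERHEAD = OUTER_IPV4_HEADER + GRE_HEADER_WITH_KEY + INNER_ETHERNET

def inner_ip_mtu(outer_ip_mtu: int) -> int:
    """Largest inner IP packet that fits without fragmenting the outer packet."""
    return outer_ip_mtu - OVERHEAD

for mtu in (1500, 9000):
    print(f"outer IP MTU {mtu} -> inner IP MTU {inner_ip_mtu(mtu)}")
```

Under those assumptions a standard 1500 byte network leaves 1458 bytes for the VM's IP packets, which is why a larger datacenter MTU helps.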

Conclusion

It's quite difficult to draw a conclusion about NVGRE, as so much is still unspecified. There are two relatively crucial mapping functions which have yet to be described:

- When a VM wants to contact a remote Customer IP and sends an ARP Request, in the absence of multicast, how can the matching MAC address be known?

- When the NVGRE Endpoint is handed a frame destined to a remote Customer MAC, how does it find the Provider Address of the remote Endpoint?

So we'll wait and see.

footnote: this blog contains articles on a range of topics. If you want more posts like this, I suggest the Ethernet label.