This is the third and final article in a series about VXLAN. I recommend reading the first and second articles before this one. I have not been briefed by any of the companies involved, nor received any NDA information. These articles are written based on public statements and discussions available on the web.

Foreign Gateways

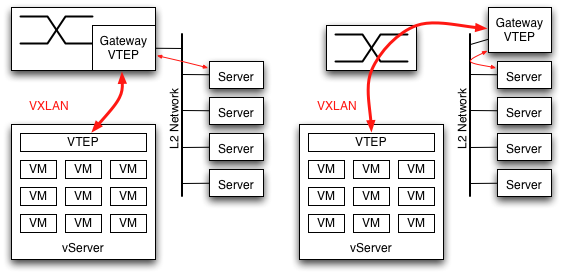

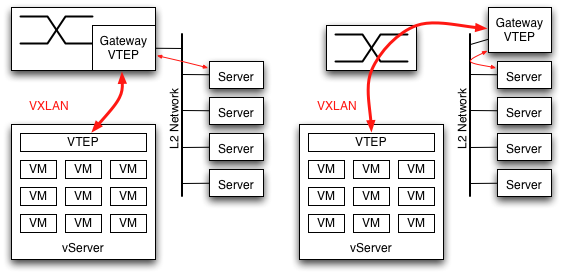

Though I've consistently described VXLAN communications as occurring between VMs, many datacenters mix virtual servers with single-instance physical servers. Something has to provide the VTEP function for all nodes on the network, but it doesn't have to be the server itself. A Gateway function can bridge to physical L2 networks, and with representatives of several switch companies among the authors of the RFC, this seems likely to materialize within the networking gear itself. The Gateway can also be provided by a server sitting within the same L2 domain as the servers it handles.

Even if the datacenter consists entirely of VMs, a Gateway function is still needed in the switch. To communicate with the Internet (or anything else outside of their subnet) the VMs will ARP for their next hop router. This router has to have a VTEP.

Transition Strategy

I'm tempted to say there isn't a transition strategy. That's a bit too harsh, in that the Gateway function just described can serve as a proxy, but it's not far from the mark. As described in the RFC, the VTEP assumes that all destination L2 addresses will be served by a remote VTEP somewhere. If the VTEP doesn't know the L3 address of the remote VTEP to send to, it floods the packet to all VTEPs using multicast. There is no provision for direct L2 communication to nodes which have no VTEP. It is assumed that an existing installation of VMs on a VLAN will be taken out of service, and all nodes reconfigured to use VXLAN. VLANs can be converted individually, but there is no provision for operation with a mixed set of VTEP-enabled and non-VTEP-enabled nodes on an existing VLAN.
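
The forwarding behavior described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Vtep` class, its field names, and the example addresses are hypothetical, and the table is assumed to be populated by learning from received packets as covered earlier in the series.

```python
from dataclasses import dataclass, field


@dataclass
class Vtep:
    """Toy forwarding table for one VNI (all names here are illustrative)."""
    vni: int
    flood_group: str  # IP multicast group assigned to this VNI
    mac_to_vtep: dict = field(default_factory=dict)  # inner MAC -> remote VTEP IP

    def learn(self, inner_src_mac: str, outer_src_ip: str) -> None:
        # Record which remote VTEP a source MAC was seen behind.
        self.mac_to_vtep[inner_src_mac] = outer_src_ip

    def outer_destination(self, inner_dst_mac: str) -> str:
        # Known MAC: unicast to its VTEP. Unknown MAC: flood to the group.
        # There is no third option of sending the frame unencapsulated.
        return self.mac_to_vtep.get(inner_dst_mac, self.flood_group)
```

Note that every lookup ends in an encapsulated packet, either unicast or multicast. That is the gap for brownfield networks: a node without a VTEP never appears in the table and can never be reached directly.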

For an existing datacenter which desires to avoid scheduling downtime for an entire VLAN, one transition strategy would use a VTEP Gateway as the first step. When the first server is upgraded to use VXLAN and have its own VTEP, all of its packets to other servers will go through this VTEP Gateway. As additional servers are upgraded they will begin communicating directly between VTEPs, and rely on the Gateway to maintain communication with the rest of their subnet.
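
As a rough illustration of this phased approach, the path a frame takes depends on which of the two servers have been upgraded. The function and the path labels below are hypothetical names chosen for this sketch, not terms from the RFC.

```python
def path(src: str, dst: str, upgraded: set) -> str:
    """Classify how traffic flows between two servers mid-transition."""
    src_up = src in upgraded
    dst_up = dst in upgraded
    if src_up and dst_up:
        return "vtep-to-vtep"   # both sides encapsulate; Gateway not involved
    if src_up or dst_up:
        return "via-gateway"    # Gateway proxies for the side without a VTEP
    return "native-l2"          # neither side upgraded; plain VLAN traffic
```

Early in the transition almost every flow involving an upgraded server lands in the `via-gateway` case, which is why the Gateway's availability matters so much.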

Where would the Gateway function go? During the transition, which could be lengthy, the Gateway VTEP will be absolutely essential for operation. It shouldn't be a single point of failure, and this should trigger the network engineer's spidey sense about adding a new critical piece of infrastructure. It will need to be monitored, people will need to be trained in what to do if it fails, etc. Therefore it seems far more likely that customers will choose to upgrade their switches to include the VTEP Gateway function, so as not to add a new critical bit of infrastructure.

Controller to the Rescue?

What makes this transition strategy difficult to accept is that VMs have to be configured to be part of a VXLAN. They have to be assigned to a particular VNI, and that VNI has to be given an IP multicast address to use for flooding. Therefore something, somewhere knows the complete list of VMs which should be part of the VXLAN. In Rumsfeldian terms, there are only known unknown addresses and no unknown unknowns. That is, the VTEP can know the complete list of destination MAC addresses it is supposed to be able to reach via VXLAN. The only unknown is the L3 address of the remote VTEP. If the VTEP encounters a destination MAC address which it doesn't know about, it doesn't have to assume it is attached to a VTEP somewhere. It could know that some MAC addresses are reached directly, without VXLAN encapsulation.

The previous article in this series brought up the reliance on multicast for learning as an issue, and suggested that a VXLAN controller would be an important product to offer. That controller could also provide a better transition strategy, allowing VTEPs to know that frames for some L2 addresses should be sent directly to the wire without a VXLAN tunnel. This doesn't make the controller part of the dataplane: it is only involved when stations are added to or removed from the VXLAN. During normal forwarding, the controller is not involved.
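
A minimal sketch of that idea follows, assuming a controller hands each VTEP the set of MAC addresses that belong to the VXLAN. Nothing like this is defined in the RFC; `Action`, `Decision`, `forward`, and `vxlan_members` are names invented for illustration, and broadcast handling is left out.

```python
from enum import Enum
from typing import NamedTuple, Optional


class Action(Enum):
    ENCAPSULATE = "encapsulate"  # unicast to a known remote VTEP
    FLOOD = "flood"              # member MAC, VTEP not yet learned
    DIRECT = "direct"            # not a VXLAN member: send unencapsulated


class Decision(NamedTuple):
    action: Action
    outer_ip: Optional[str]


def forward(dst_mac: str, vxlan_members: set, mac_to_vtep: dict,
            flood_group: str) -> Decision:
    # vxlan_members is the controller-supplied list (an assumption here).
    if dst_mac not in vxlan_members:
        return Decision(Action.DIRECT, None)
    remote = mac_to_vtep.get(dst_mac)
    if remote is None:
        # A "known unknown": the MAC is in the VXLAN, its VTEP is not yet known.
        return Decision(Action.FLOOD, flood_group)
    return Decision(Action.ENCAPSULATE, remote)
```

The controller only changes `vxlan_members` when a station joins or leaves, so it stays out of the per-packet path.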

It is safe to say that the transition strategy for existing, brownfield datacenter networks is the part of the VXLAN proposal which I like the least.

Other miscellaneous notes

VXLAN prepends about 50 bytes of headers to the original packet: the outer Ethernet, IP, and UDP headers account for 42 bytes, and the VXLAN header itself adds 8 more. To avoid IP fragmentation the L3 network needs to handle a slightly larger frame size than standard Ethernet. Support for Jumbo frames is almost universal in networking gear at this point, so this should not be an issue.
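
The header arithmetic can be tallied as below. The sizes assume an untagged outer Ethernet header and IPv4 without options: outer Ethernet, IPv4, and UDP together come to 42 bytes, and the 8-byte VXLAN header brings the total to 50. The function names are made up for this sketch.

```python
OUTER_ETHERNET = 14  # untagged outer Ethernet header
OUTER_VLAN_TAG = 4   # optional 802.1Q tag on the outer frame
OUTER_IPV4 = 20      # IPv4 header without options
OUTER_UDP = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14  # the inner frame's own Ethernet header travels as payload


def prepended_bytes(outer_vlan: bool = False) -> int:
    """Bytes added in front of the original L2 frame."""
    total = OUTER_ETHERNET + OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER
    return total + (OUTER_VLAN_TAG if outer_vlan else 0)


def required_underlay_ip_mtu(inner_ip_mtu: int = 1500) -> int:
    """IP MTU the L3 network must carry so the outer packet is not fragmented."""
    return inner_ip_mtu + INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4
```

With VMs left at the usual 1500-byte MTU, `required_underlay_ip_mtu()` comes to 1550, comfortably within what Jumbo-capable gear handles.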

There is only a single multicast group per VNI. All broadcast and multicast frames in that VXLAN will be sent to that one IP multicast group and delivered to all VTEPs. The VTEP would likely run an IGMP Snooping function locally to determine whether to deliver multicast frames to its VMs. VXLAN as currently defined can't prune the delivery tree: all VTEPs must receive all frames. It would be nice to be able to prune delivery within the network, and not deliver to VTEPs which have no subscribing VMs. This would require multiple IP multicast groups per VNI, which would complicate the proposal.
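
To make the distinction concrete, here is a sketch of the kind of local filtering a VTEP could do. The class and method names are hypothetical, and real IGMP Snooping tracks membership reports and timers rather than explicit calls.

```python
from collections import defaultdict


class LocalMulticastFilter:
    """Tracks which local VMs joined which inner multicast groups."""

    def __init__(self) -> None:
        self._members = defaultdict(set)  # inner group address -> local VM names

    def join(self, group: str, vm: str) -> None:
        self._members[group].add(vm)

    def leave(self, group: str, vm: str) -> None:
        members = self._members.get(group)
        if members is None:
            return
        members.discard(vm)
        if not members:
            del self._members[group]

    def receivers(self, group: str) -> set:
        # The frame has already crossed the network to reach this VTEP;
        # this only decides which local VMs get to see it.
        return set(self._members.get(group, ()))
```

An empty result from `receivers` means the VTEP drops the frame, but only after the network has already spent the bandwidth to deliver it.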

Conclusion

I like the VXLAN proposal. I view the trend toward having enormous L2 networks in datacenters as disturbing, and see VXLAN as a way to give the VMs the network they want without tying it to the underlying physical infrastructure. It virtualizes the network to meet the needs of the virtual servers.

After beginning to publish these articles on VXLAN I became aware of another proposal, NVGRE. There appear to be some similarities, including the use of IP multicast to fan out L2 broadcast/multicast frames, and the two proposals even share an author. NVGRE uses GRE encapsulation instead of the UDP+VXLAN header, with multiple L2 addresses to provide load balancing across LACP/ECMP links. It will take a while to digest, but I expect to write some thoughts about NVGRE in the future.

Many thanks to Ken Duda, whose patient explanations of VXLAN on Google+ made this writeup possible.

footnote: this blog contains articles on a range of topics. If you want more posts like this, I suggest the Ethernet label.

I'm tempted to say there isn't a transition strategy. Thats a bit too harsh in that the Gateway function just described can serve as a proxy, but its not far from the mark. As described in

I'm tempted to say there isn't a transition strategy. Thats a bit too harsh in that the Gateway function just described can serve as a proxy, but its not far from the mark. As described in  What makes this transition strategy difficult to accept is that VMs have to be configured to be part of a VXLAN. They have to be assigned to a particular VNI, and that VNI has to be given an IP multicast address to use for flooding. Therefore something, somewhere knows the complete list of VMs which should be part of the VXLAN. In

What makes this transition strategy difficult to accept is that VMs have to be configured to be part of a VXLAN. They have to be assigned to a particular VNI, and that VNI has to be given an IP multicast address to use for flooding. Therefore something, somewhere knows the complete list of VMs which should be part of the VXLAN. In  The UDP header serves an interesting purpose, it isn't there to perform the multiplexing role UDP normally serves. When switches have multiple paths available to a destination, whether an L2 trunk or L3 multipathing, the specific link is chosen by hashing packet headers. Most switch hardware is quite limited in how it computes the hash: the outermost L2/L3/L4 headers. Some chips can examine the inner headers of long-established tunneling protocols like GRE/MAC-in-MAC/IP-in-IP. For a new protocol like VXLAN, it would take years for silicon support for the inner headers to become common.

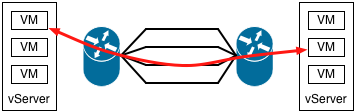

The UDP header serves an interesting purpose, it isn't there to perform the multiplexing role UDP normally serves. When switches have multiple paths available to a destination, whether an L2 trunk or L3 multipathing, the specific link is chosen by hashing packet headers. Most switch hardware is quite limited in how it computes the hash: the outermost L2/L3/L4 headers. Some chips can examine the inner headers of long-established tunneling protocols like GRE/MAC-in-MAC/IP-in-IP. For a new protocol like VXLAN, it would take years for silicon support for the inner headers to become common.

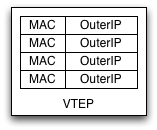

The VTEP examines the destination MAC address of frames it handles, looking up the IP address of the VTEP for that destination. This MAC:OuterIP mapping table is populated by learning, very much like an L2 switch discovers the port mappings for MAC addresses. When a VM wishes to communicate with another VM it generally first sends a broadcast ARP, which its VTEP will send to the multicast group for its VNI. All of the other VTEPs will learn the Inner MAC address of the sending VM and Outer IP address of its VTEP from this packet. The destination VM will respond to the ARP via a unicast message back to the sender, which allows the original VTEP to learn the destination mapping as well.

The VTEP examines the destination MAC address of frames it handles, looking up the IP address of the VTEP for that destination. This MAC:OuterIP mapping table is populated by learning, very much like an L2 switch discovers the port mappings for MAC addresses. When a VM wishes to communicate with another VM it generally first sends a broadcast ARP, which its VTEP will send to the multicast group for its VNI. All of the other VTEPs will learn the Inner MAC address of the sending VM and Outer IP address of its VTEP from this packet. The destination VM will respond to the ARP via a unicast message back to the sender, which allows the original VTEP to learn the destination mapping as well. Though it is appealing to let VTEPs track each other automatically using multicast and learning, I suspect beyond a certain scale of network that isn't going to work very well. Multicast frames are not reliably delivered, and because they fan out to all nodes they tend to become ever less reliable as the number of nodes increases. The

Though it is appealing to let VTEPs track each other automatically using multicast and learning, I suspect beyond a certain scale of network that isn't going to work very well. Multicast frames are not reliably delivered, and because they fan out to all nodes they tend to become ever less reliable as the number of nodes increases. The

Not quite two years ago in this space

Not quite two years ago in this space