It started, as things often do nowadays, with a tweet. As part of a discussion of networking-fu I mentioned Proxy ARP, saying that it was no longer used. Ivan Pepelnjak corrected me: it does still have a use. He wrote about it last year. I've tried to understand it, and wrote this post so I can come back to it later as a reminder.

Wayback Machine to 1985

ifconfig eth0 10.0.0.1 netmask 255.255.255.0

That's it, right? You always configure an IP address plus subnet mask. The host will ARP for addresses on its subnet, and send to a router for addresses outside its subnet.
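
Here's a minimal Python sketch of that decision, using the standard library's `ipaddress` module. The function name `next_hop` and the router address 10.0.0.254 are hypothetical, and a real IP stack consults a full routing table rather than a single gateway.

```python
import ipaddress

def next_hop(local_if: str, gateway: str, dest: str) -> str:
    """Return the address a host would ARP for when sending to dest.

    local_if is the host's own address plus mask, e.g. "10.0.0.1/255.255.255.0".
    Destinations inside that subnet are reached directly; everything
    else goes via the (hypothetical) default gateway.
    """
    iface = ipaddress.ip_interface(local_if)
    target = ipaddress.ip_address(dest)
    if target in iface.network:
        return str(target)   # on-link: ARP for the destination itself
    return gateway           # off-link: ARP for the router instead

if __name__ == "__main__":
    # Same configuration as the ifconfig line above; 10.0.0.254 is a made-up router.
    print(next_hop("10.0.0.1/255.255.255.0", "10.0.0.254", "10.0.0.77"))  # 10.0.0.77
    print(next_hop("10.0.0.1/255.255.255.0", "10.0.0.254", "10.0.1.5"))   # 10.0.0.254
```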

Yet it wasn't always that way. Subnet masks were retrofitted into IPv4 in the early 1980s. Before that there were no subnets. The host would AND the destination address with a class A/B/C mask, and send to the ARPAnet for anything outside of its own network. Yes, this means a class A network was expected to have all of its roughly 16 million hosts on a single Ethernet segment. This seems ludicrous now, but until the early 1980s it wasn't a real-world problem. There just weren't that many hosts at a site. The IPv4 address space was widely perceived as so large as to be effectively infinite; only a small fraction of the addresses would ever actually be used.
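
To make the classful rule concrete, here is a small Python sketch (the function names are hypothetical) that derives the network a host without subnet support would assume, based only on the leading bits of the first octet.

```python
import ipaddress

def classful_network(addr: str) -> ipaddress.IPv4Network:
    """Network a pre-subnetting host would assume addr belongs to.

    The class comes from the leading bits of the first octet:
    0xxxxxxx -> class A (/8), 10xxxxxx -> class B (/16),
    110xxxxx -> class C (/24).
    """
    ip = ipaddress.IPv4Address(addr)
    first_octet = int(ip) >> 24
    if first_octet < 128:
        prefix = 8
    elif first_octet < 192:
        prefix = 16
    elif first_octet < 224:
        prefix = 24
    else:
        raise ValueError(f"{addr} is class D/E, not a unicast network")
    # strict=False clears the host bits, i.e. ANDs addr with the class mask.
    return ipaddress.IPv4Network(f"{addr}/{prefix}", strict=False)

def same_classful_network(a: str, b: str) -> bool:
    """True if a classful host at a would ARP for b directly."""
    return ipaddress.IPv4Address(b) in classful_network(a)
```

For example, `classful_network("10.0.0.1")` is 10.0.0.0/8, whose `num_addresses` is 2**24 (16,777,216), which is where the "16 million hosts on one segment" comes from.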

Aside: in the 1980s the 10.x.x.x network used in that example had a different use than it does now. Back then it was the ARPAnet, the way you would send packets around the world. When the ARPAnet was decommissioned, the 10.0.0.0/8 block was made available for its modern use: addressing hosts that are not globally routed.

There was a period of several years where subnet masks were gradually implemented by the operating systems of the day. My recollection is that BSD 4.0 did not implement subnets while 4.1 did, but this is probably wrong. In any case, once an organization decided to start using subnets it would need a way to deal with stragglers. The solution was Proxy ARP.

It's easy to detect a host which isn't using subnets: it will ARP for addresses which it shouldn't, ones that lie on other subnets. The router examines incoming ARP requests and, if the target address is off-segment, responds with its own MAC address. In effect the router impersonates the remote system, so that hosts which don't implement subnet masking can still function in a subnetted world. The load on the router was unfortunate, but worthwhile.
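
A toy Python model of that router behavior, as a sketch only: the class and method names are hypothetical, the router only knows its directly attached subnets (a real router would check its whole routing table), and the MAC addresses come from the 00:00:5e:00:53:xx documentation range.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Optional

@dataclass
class Interface:
    name: str
    address: ipaddress.IPv4Interface   # router's own address and the real subnet on this segment
    mac: str

class ProxyArpRouter:
    """Toy router that answers ARP on behalf of hosts on other segments."""

    def __init__(self, interfaces: Iterable[Interface]):
        self.interfaces = {i.name: i for i in interfaces}

    def _has_route(self, target: ipaddress.IPv4Address) -> bool:
        # Sketch assumption: only attached subnets are reachable.
        return any(target in i.address.network for i in self.interfaces.values())

    def handle_arp_request(self, ifname: str, target_ip: str) -> Optional[str]:
        """Return the MAC the router would reply with, or None to stay silent."""
        iface = self.interfaces[ifname]
        target = ipaddress.IPv4Address(target_ip)
        if target == iface.address.ip:
            return iface.mac    # ordinary ARP for the router itself
        if target in iface.address.network:
            return None         # on-segment: the real owner will answer
        if self._has_route(target):
            return iface.mac    # off-segment: impersonate the remote host
        return None             # unreachable: don't claim it
```

With eth0 on 10.1.1.1/24 and eth1 on 10.1.2.1/24, a host on eth0 that still believes it lives on the classful 10.0.0.0/8 will ARP for 10.1.2.7; the router answers with eth0's MAC and then forwards the packets itself, while an ARP for 10.1.1.9 is left for that host to answer.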

Proxy ARP Today

That was decades ago. Yet Proxy ARP is still implemented in modern network equipment, and has some modern uses. One such case is in Ethernet access networks.

Consider a network using traditional L3 routing: you give each subscriber an IP address on their own IP subnet. You need a router address on the same subnet, and you need a broadcast address. Needing 3 IPs per subscriber means a /30. That's 4 IP addresses allocated per customer.
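
The arithmetic behind the /30, sketched in Python: blocks come in powers of two (and the all-zeros network address is traditionally not given to a host), so three needed addresses round up to a 4-address block. The 192.0.2.0/30 block is from the documentation range and the router-gets-.1 layout is just a common convention assumed here, not a rule.

```python
import ipaddress

def smallest_prefix(addresses_needed: int) -> int:
    """Longest IPv4 prefix whose block holds at least addresses_needed addresses."""
    if addresses_needed < 1:
        raise ValueError("need at least one address")
    # (n - 1).bit_length() is the number of host bits required, computed in exact integers.
    return 32 - (addresses_needed - 1).bit_length()

# Subscriber, router and broadcast address: three addresses.
prefix = smallest_prefix(3)
block = ipaddress.IPv4Network(f"192.0.2.0/{prefix}")   # hypothetical subscriber block
network_addr, router, subscriber, broadcast = list(block)

print(prefix, block.num_addresses)                      # 30 4
print(network_addr, router, subscriber, broadcast)      # 192.0.2.0 192.0.2.1 192.0.2.2 192.0.2.3
```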

There are some real advantages to giving each subscriber a separate subnet and requiring that all communication go through a router. Security is one: malware cannot spread from one subscriber to another without the service provider seeing it. Yet burning 4 IP addresses for each customer is painful.

To improve the utilization of IP addresses, we might configure the access gear to switch at L2 between subscribers on the same box. Now we only allocate one IP address per subscriber instead of four, but we expose all other subscribers in that L2 domain to potentially malicious traffic which the service provider cannot police.

We also end up with an inflexible network topology: it becomes arduous to change subnet allocations, because subscriber machines know how big the subnets are. As DHCP leases expire the customer systems should eventually learn of a new mask, but people sometimes do weird things with their configuration.

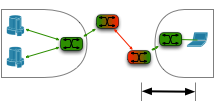

A final option relies on Proxy ARP to decouple the subscriber's notion of the netmask from the real network topology. I'm basing this diagram on a comment by troyand on ioshints.com. Each subscriber is allocated a VLAN by the distribution switch. The VLANs themselves are unnumbered: they have no IP address. The subscriber is handed an IP address and netmask by DHCP, but the subscriber's netmask doesn't correspond to the actual network topology. They might be given a /16, but that doesn't mean sixty-four thousand other subscribers are on the segment with them. The router uses Proxy ARP to catch attempts by the subscriber to communicate with nearby addresses.
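
A rough Python model of this scheme. The assumptions are mine rather than troyand's: the handed-out mask is 10.1.0.0/16, the router keeps a binding table mapping each subscriber address to its VLAN (for example learned while relaying DHCP), and every name, address, VLAN number, and MAC here is hypothetical.

```python
import ipaddress
from typing import Dict, Optional

ROUTER_MAC = "00:00:5e:00:53:01"                        # hypothetical router MAC
SUBSCRIBER_POOL = ipaddress.ip_network("10.1.0.0/16")   # hypothetical mask handed out by DHCP

# Hypothetical binding table: subscriber IP -> the VLAN it actually lives on.
bindings: Dict[str, int] = {
    "10.1.0.5": 101,
    "10.1.0.6": 102,
    "10.1.0.7": 103,
}

def subscriber_thinks_on_link(src_ip: str, dst_ip: str) -> bool:
    """The subscriber ARPs directly for anything inside the /16 it was given."""
    src = ipaddress.ip_interface(f"{src_ip}/{SUBSCRIBER_POOL.prefixlen}")
    return ipaddress.ip_address(dst_ip) in src.network

def router_arp_reply(vlan: int, target_ip: str) -> Optional[str]:
    """Answer an ARP request arriving on an unnumbered subscriber VLAN."""
    if ipaddress.ip_address(target_ip) not in SUBSCRIBER_POOL:
        return None        # sketch choice: ignore targets outside the handed-out range
    if bindings.get(target_ip) == vlan:
        return None        # the target really is on this VLAN; let it answer
    return ROUTER_MAC      # everyone else is "nearby" only from the subscriber's point of view
```

When 10.1.0.5 on VLAN 101 wants to reach its "neighbor" 10.1.0.6, `subscriber_thinks_on_link` is true so it ARPs, and `router_arp_reply(101, "10.1.0.6")` hands back the router's MAC, so the traffic crosses the router where policy can be applied. A gateway address such as 10.1.0.1 that sits in the pool but isn't bound to any subscriber is answered by the same rule. Whether to answer for unassigned pool addresses at all is a design choice; this sketch does.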

This lets service providers get the best of both worlds: communication between subscribers goes through the service provider's equipment so it can enforce security policies, while consuming only one IPv4 address per subscriber.

My current project relies on a large number of

In a typical datacenter, the servers sit behind a load balancer. The simplest such equipment distributes sessions without modifying the packets, but the market demands

High-speed wide area networks are expensive. If a one-time purchase of a magic box at each end can reduce the monthly cost of the connection between them, then there is an economic benefit to buying the magic box.

Organizations set up HTTP proxies to enforce security policies, to cache content, and for a host of other reasons.