Yesterday at GlueCon, Jud Valeski of Gnip gave a talk on "High-Volume, Real-time Data Stream Handling" where he discussed some characteristics of the Gnip datafeed. I wasn't at the talk, but the snippets tweeted by Kevin Marks were fascinating:

- There are 155M tweets/day. With metadata each tweet averages ~2500 bytes, so it works out to about 35 Mbps.

- Data is provided as a single HTTP stream at 35 Mbps.

- Servers and routers are not optimized for handling a 35 Mbps HTTP stream, and tuning has been necessary.
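
The 35 Mbps figure can be checked with a few lines of arithmetic. This is only a back-of-the-envelope sketch using the numbers quoted above; the constant and function names are mine, and it assumes 1 Mb = 10^6 bits.

```python
# Figures quoted in the tweeted snippets from the talk.
TWEETS_PER_DAY = 155_000_000
BYTES_PER_TWEET = 2500          # average size including metadata
SECONDS_PER_DAY = 24 * 60 * 60


def average_mbps(tweets_per_day, bytes_per_tweet):
    """Average stream rate in megabits per second (1 Mb = 10**6 bits)."""
    bits_per_day = tweets_per_day * bytes_per_tweet * 8
    return bits_per_day / SECONDS_PER_DAY / 1e6


avg_tps = TWEETS_PER_DAY / SECONDS_PER_DAY
avg_rate = average_mbps(TWEETS_PER_DAY, BYTES_PER_TWEET)
print(f"{avg_tps:.0f} tweets/sec on average, {avg_rate:.1f} Mbps")
# prints: 1794 tweets/sec on average, 35.9 Mbps
```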

35 Megabits per second may not sound like much when the links are measured in Gigabits, so I thought I'd take a guess at the kinds of issues being seen.

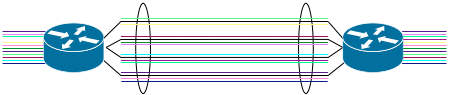

For several decades the network link speed has roughly doubled every 18 months, pacing Moore's law on the CPU. Silicon advances in signal processing have certainly helped with that, but a host of photonic advances have also been required. As with CPUs, we've been further increasing network capacity via parallelism. Multiple links are run, with traffic load spread across all links in the group. This means we've increased aggregate performance faster than the individual connections.

One might think that distributing traffic across multiple parallel links would be simple: send the first packet to the first link, the second packet to the second link, etc. This turns out not to work very well, because packets are variable size. If packet #1 is large and #2 is small, packet #2 can pop out of its link before packet #1. TCP interprets packet reordering as a sign of network congestion. If packets 1 and 2 are part of the same TCP flow, that flow will slow down. Therefore link aggregation has to be aware of flows, and keep packets from the same flow in order even as separate flows are reordered.
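
The reordering is easy to reproduce in a toy model. The sketch below is not how any real switch works: it assumes two links of 1 Gbps each, all packets already queued at time zero, and that a packet "pops out" once its last bit has been serialized. The function name `departure_order` and the packet sizes are made up for illustration.

```python
LINK_BPS = 1_000_000_000  # assumed: 1 Gbps per link


def departure_order(packets, pick_link, n_links=2):
    """Return sequence numbers in the order the packets finish transmission.

    packets: list of (seq, size_bytes), all queued at time 0 in seq order.
    pick_link: function (packet_index, n_links) -> link number.
    """
    link_free_at = [0.0] * n_links
    finished = []
    for index, (seq, size) in enumerate(packets):
        link = pick_link(index, n_links)
        done = link_free_at[link] + size * 8 / LINK_BPS  # serialization time
        link_free_at[link] = done
        finished.append((done, seq))
    return [seq for done, seq in sorted(finished)]


# One flow alternating large and small packets.
packets = [(1, 1500), (2, 64), (3, 1500), (4, 64)]

round_robin = departure_order(packets, lambda i, n: i % n)
single_link = departure_order(packets, lambda i, n: 0)

print("round robin:       ", round_robin)   # [2, 4, 1, 3]
print("one link per flow: ", single_link)   # [1, 2, 3, 4]
```

With per-packet round robin the small packets overtake the large ones; pinning the flow to one link keeps them in order at the cost of leaving the other link idle for this flow.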

Aside: lots of networking gear actually does have a mode for sending packet #1 to link 1 and #2 to link 2. It is cynically referred to as "magazine mode," as in: "In Network Computing's magazine article we'll be able to show 100% utilization on all links." In real networks, magazine mode is rarely useful.

Hashing

Traffic is distributed across parallel links via hashing. One or more of the MAC address, IP address, or TCP/UDP port numbers will be hashed to an index and used to select the link. All packets from the same flow will hash to the same value and choose the same link. Given a large number of flows, the distribution tends to be pretty good.
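
Here is a minimal sketch of that kind of flow hashing. Real switches use vendor-specific hash functions and field selections; CRC32 over a string of the 5-tuple is purely my stand-in, and `pick_link`, the addresses, and the port range are illustrative.

```python
import zlib
from collections import Counter

N_LINKS = 4  # assumed size of the link group


def pick_link(src_ip, dst_ip, proto, src_port, dst_port, n_links=N_LINKS):
    """Hash the flow's 5-tuple to an index and use it to select a link."""
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % n_links


# Every packet of one flow carries the same 5-tuple, so it picks the same link.
flow = ("10.0.0.1", "10.0.0.2", "tcp", 40000, 80)
links_for_flow = {pick_link(*flow) for _ in range(1000)}

# Many flows (here differing only in source port) spread across the group.
counts = Counter(
    pick_link("10.0.0.1", "10.0.0.2", "tcp", 1024 + i, 80)
    for i in range(10_000)
)

print("links used by the single flow:", links_for_flow)
print("flows per link:", sorted(counts.items()))
```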

The distribution doesn't react to load. If one link becomes overloaded, the load isn't redistributed to even it out. It can't be: the switch isn't keeping track of the flows it has seen and which link they should go to, it just hashes each packet as it arrives. The presence of a few very high bandwidth flows causes the load to become unbalanced.
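
To get a feel for how a few heavy flows skew things, here is a small simulation. Everything in it is assumed for illustration: 1000 light flows at 100 kbps, three heavy flows at 35 Mbps, four links, and CRC32 standing in for whatever hash the switch really uses. Which link ends up overloaded depends entirely on where the heavy flows happen to hash.

```python
import zlib

N_LINKS = 4  # assumed size of the link group


def pick_link(flow_id, n_links=N_LINKS):
    # Stateless: the choice depends only on the flow identifier,
    # never on how loaded each link currently is.
    return zlib.crc32(flow_id.encode()) % n_links


# Offered load per flow in kbps (made-up traffic mix).
flows = {f"mouse-{i}": 100 for i in range(1000)}
flows.update({f"elephant-{i}": 35_000 for i in range(3)})

load_kbps = [0] * N_LINKS
for flow_id, rate in flows.items():
    load_kbps[pick_link(flow_id)] += rate

for link, load in enumerate(load_kbps):
    print(f"link {link}: {load / 1000:.1f} Mbps")
```

The light flows alone add up to 100 Mbps and tend to spread fairly evenly, while each heavy flow adds its full 35 Mbps to whichever single link it lands on.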

35 Megabits per second is only a fraction of the capacity of an individual link, but that one flow is by no means the only traffic on the net. Once delivered, the tweets have to be acted upon in some fashion and that results in additional traffic on the network. It would be easy to end up with a number of flows at 35 Mbps each.

IRQs

Networks have increased aggregate capacity via parallel links. Servers have increased aggregate capacity via parallel CPUs, and the same issue of keeping packets in order arises. Server NICs distribute the IRQ load across CPUs by hashing, so a single flow has to go to a single CPU, creating a hotspot in the interrupt handling. The software can immediately hand off processing to other CPUs, but the interrupt handling itself will still be a bottleneck.

Peak vs Sustained

Right now, the unbalanced load should be manageable. The tweetstream is only 35 Mbps, roughly 1/30th the capacity of a Gigabit network link and 1/10th that of a single CPU core. There is currently some headroom, but there are two trends which will make unbalancing more of a problem in the future:

- Tweet volume triples in a year.

- 35 Mbps is just an average.

The volume of tweets isn't constant; it suddenly increases in response to events. In March 2011 the record number of tweets per second was 6,939, which at ~2500 bytes per tweet works out to 138 Megabits per second. If volume keeps tripling each year, the peak can be expected to reach 416 Mbps in a year. In two years it will be over a Gigabit.
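
The projection is simple compounding. This sketch assumes the same ~2500 bytes per tweet at peak and that the tripling holds for peaks as well as averages; the names are mine.

```python
PEAK_TPS = 6939           # record tweets per second, March 2011
BYTES_PER_TWEET = 2500    # average size including metadata
ANNUAL_GROWTH = 3         # "tweet volume triples in a year"


def peak_mbps(years_ahead):
    """Projected peak rate in Mbps, assuming volume keeps tripling yearly."""
    bits_per_second = PEAK_TPS * BYTES_PER_TWEET * 8
    return bits_per_second * ANNUAL_GROWTH ** years_ahead / 1e6


for years in range(3):
    print(f"+{years} years: {peak_mbps(years):.1f} Mbps")
# prints:
# +0 years: 138.8 Mbps
# +1 years: 416.3 Mbps
# +2 years: 1249.0 Mbps
```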

Hardware advances won't keep up with that. At peak times it's already causing some heartburn, and it will get a little worse every day. The load needs to be better distributed.