This is the fourth and final article in a series exploring the Juniper QFabric. Earlier articles provided an overview, a discussion of link speed, and musings on flow control. Juniper says the QFabric should not be thought of as a network but as one large distributed switch. This series examines techniques used in modular switch designs, and tries to apply them to the QFabric. This article attempts to cover a few loose ends, and wraps up the series.

As with previous days, the flow control post sparked an interesting discussion on Google+.

Whither Protocols?

For a number of years switch and router manufacturers competed on protocol support, implementing various extensions to OSPF/BGP/SpanningTree/etc in their software. QFabric is almost completely silent about protocols. In part this is a marketing philosophy: positioning the QFabric as a distributed switch instead of a network means that the protocols running within the fabric are an implementation detail, not something to talk about. I don't know what protocols are run between the nodes of the QFabric, but I'm sure it's not Spanning Tree and OSPF.

Yet QFabric will need to connect to other network elements at its edge, where the datacenter connects to the outside world. Presumably the routing protocols it needs are implemented in the QF/Director and piped over to whichever switch ports connect to the rest of the network. If there are multiple peering points, they all need to communicate with the same entity and share a common routing information base.

Flooding Frowned Upon

The edge Nodes have an L2 table holding 96K MAC addresses. This reinforces the notion that switching decisions are made at the ingress edge: every Node can know how to reach destination MAC addresses at every port. There are a few options for distributing MAC address information to all of the nodes, but I suspect that flooding unknown addresses to all ports is not the preferred mechanism. If flooding is allowed at all, it would be carefully controlled.

Much of modern datacenter design revolves around virtualization. The VMware vCenter (or equivalent) is a single, authoritative source of topology information for virtual servers. By hooking to the VM management system, the QFabric Director could know the expected port and VLAN for each server MAC address. The Node L2 tables could be pre-populated accordingly.
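To make the pre-population idea concrete, here is a minimal sketch. It assumes a hypothetical inventory export from the VM management system; the record fields, the node names, and `build_l2_tables` are all invented for illustration and do not reflect any actual QFabric or vCenter API.

```python
# Hypothetical inventory records, as might be exported from a VM manager.
# The field names are assumptions made for this illustration only.
INVENTORY = [
    {"mac": "00:50:56:aa:00:01", "vlan": 10, "node": "node-1", "port": 3},
    {"mac": "00:50:56:aa:00:02", "vlan": 10, "node": "node-2", "port": 7},
    {"mac": "00:50:56:aa:00:03", "vlan": 20, "node": "node-2", "port": 8},
]


def build_l2_tables(inventory, nodes):
    """Return {node: {(vlan, mac): (egress_node, egress_port)}}.

    Every node receives the full table, so the ingress node can choose
    the final egress port without flooding unknown addresses.
    """
    full = {(r["vlan"], r["mac"]): (r["node"], r["port"]) for r in inventory}
    return {node: dict(full) for node in nodes}


tables = build_l2_tables(INVENTORY, ["node-1", "node-2"])

# node-1 already knows where a MAC attached to node-2 lives.
print(tables["node-1"][(10, "00:50:56:aa:00:02")])
```

Whether the real Director pushes complete tables or only the entries each Node is likely to need is not something I know; the sketch takes the simplest option.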

By hooking to the VM management console, QFabric could also coordinate VLANs, flow control settings, and other network settings with the virtual switches running in software.

NetOps Force Multiplier

Where previously network engineers would be configuring dozens of switches, QFabric now proposes to manage a single distributed switch. Done well, this should be a substantial time saver. There will of course be cases where the abstraction leaks and the individual Nodes have to be dealt with. The failure modes in a distributed switch are simply different. It's unlikely that a single line card within a chassis will unexpectedly lose power, but it's almost certain that Nodes occasionally will. Nonetheless, the cost to operate QFabric seems promising.

Conclusion

QFabric is an impressive piece of work, clearly the result of several years' effort. Though the Interconnects use merchant silicon, Juniper almost certainly started working with the manufacturer at the start of the project to ensure the chip would meet their needs.

The most interesting part of QFabric is its flow control mechanism, for which Juniper has made some pretty stunning claims. A flow control mechanism with fairness, no packet loss, and quick reaction to changes over such a large topology is an impressive feat.

footnote: this blog contains articles on a range of topics. If you want more posts like this, I suggest the Ethernet label.

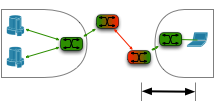

The flow control is for the whole link. For a switch with multiple downstream ports, there is no way to signal back to the sender that only some of the ports are congested. In this diagram, a single congested port requires the upstream to be flow controlled, even though the other port could accept more packets. Ethernet flow control suffers from head-of-line blocking: a single congested port will choke off traffic to other uncongested ports.
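The effect can be seen in a toy simulation. This is a sketch under simplifying assumptions, not a model of any real switch: the upstream always has one packet per tick for each of two egress ports, the "fast" port drains one packet per tick, the "slow" port drains one packet every four ticks, and the queue limit is arbitrary. The per-port mode is an idealized comparison that whole-link flow control cannot provide.

```python
def simulate(ticks, link_level_pause, queue_limit=4, slow_period=4):
    """Return packets delivered per egress port after `ticks` ticks."""
    depth = {"slow": 0, "fast": 0}
    delivered = {"slow": 0, "fast": 0}
    for tick in range(ticks):
        full = {port for port in depth if depth[port] >= queue_limit}
        # Whole-link flow control: one full queue pauses all traffic.
        paused = link_level_pause and bool(full)
        for port in depth:
            if paused or port in full:
                continue  # nothing is accepted for this port this tick
            depth[port] += 1
        # Drain the egress queues.
        if depth["fast"] > 0:
            depth["fast"] -= 1
            delivered["fast"] += 1
        if tick % slow_period == 0 and depth["slow"] > 0:
            depth["slow"] -= 1
            delivered["slow"] += 1
    return delivered


whole_link = simulate(100, link_level_pause=True)
per_port = simulate(100, link_level_pause=False)
print("whole-link pause:", whole_link)
print("idealized per-port:", per_port)
```

With whole-link pause, the fast port ends up throttled to roughly the rate of the slow one, even though it never congests on its own.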

A common spec for switch silicon in the 1 Gbps generation is 48 x 1 Gbps ports plus 4 x 10 Gbps. Depending on the product requirements, the 10 Gbps ports can be used for server attachment or as uplinks to build into a larger switch. At first glance the chassis application appears to be somewhat oversubscribed, with 48 Gbps of downlink but only 40 Gbps of uplink. In reality, when used in a chassis the uplink ports will run at 12.5 Gbps to get 50 Gbps of uplink bandwidth.
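The arithmetic is easy to check. The helper name `oversubscription` is mine; the port counts and speeds are the ones quoted above.

```python
def oversubscription(downlink_gbps, uplink_gbps):
    """Ratio of downlink to uplink bandwidth; above 1.0 is oversubscribed."""
    return downlink_gbps / uplink_gbps


downlink = 48 * 1.0           # 48 x 1 Gbps server-facing ports
uplink_nominal = 4 * 10.0     # 4 x 10 Gbps at the nominal rate
uplink_chassis = 4 * 12.5     # the same 4 ports run at 12.5 Gbps

print(oversubscription(downlink, uplink_nominal))   # 1.2
print(oversubscription(downlink, uplink_chassis))   # 0.96
```

A ratio just under 1.0 means the uplinks have slightly more capacity than the downlinks can fill.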

QFabric consists of edge nodes wired to two or four extremely large

Modular Ethernet switches have line cards which can switch between ports on the card, with fabric cards (also commonly called supervisory modules, route modules, or MSMs) between line cards. One might assume that each level of switching would function like we expect Ethernet switches to work, forwarding based on the L2 or L3 destination address. There are a number of reasons why this doesn't work very well, most troublesome of which are the consistency issues. There is a delay between when a packet is processed by the ingress line card and the fabric, and between the fabric and egress. The L2 and L3 tables can change between the time a packet hits one level of switching and the next, and it's very, very hard to design a robust switching platform with so many corner cases and race conditions to worry about.

Therefore all Ethernet switch silicon I know of relies on control headers prepended to the packet. A forwarding decision is made at exactly one place in the system, generally either the ingress line card or the central fabric cards. The forwarding decision includes any rewrites or tunnel encapsulations to be done, and determines the egress port. A header is prepended to the packet for the rest of its trip through the chassis, telling all remaining switch chips what to do with it. To avoid impacting the forwarding rate, these headers replace part of the
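As a rough illustration of the prepended-header idea, here is a sketch with an invented 4-byte layout. Real switch silicon uses vendor-specific formats that I am not reproducing; the field names, sizes, and function names below are all hypothetical.

```python
import struct

# Hypothetical control header: destination module (1 byte),
# destination port (1 byte), rewrite flags (2 bytes), big-endian.
HEADER = struct.Struct("!BBH")


def ingress(frame: bytes, dest_module: int, dest_port: int, flags: int = 0) -> bytes:
    """Make the forwarding decision once and record it ahead of the frame."""
    return HEADER.pack(dest_module, dest_port, flags) + frame


def fabric_next_hop(tagged: bytes) -> int:
    """The fabric reads only the header; it never consults L2/L3 tables."""
    dest_module, _port, _flags = HEADER.unpack_from(tagged)
    return dest_module


def egress(tagged: bytes):
    """Strip the header before the frame leaves the chassis."""
    _module, dest_port, _flags = HEADER.unpack_from(tagged)
    return dest_port, tagged[HEADER.size:]


frame = b"\x00\x11\x22\x33\x44\x55" + b"payload"
tagged = ingress(frame, dest_module=2, dest_port=17)
print(fabric_next_hop(tagged))
print(egress(tagged))
```

Because later stages act only on the header, a table update that lands while the packet is in flight cannot produce an inconsistent decision.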

Generally the chips are configured to use these prepended control headers only on backplane links, and drop the header before the packet leaves the chassis. There are some exceptions where control headers are carried over external links to another box. Several companies sell variations on the

Because I brought it up earlier, we'll conclude with a discussion of page coloring. I am not satisfied with the

Before fetching a value from memory the CPU consults its cache. The least significant bits of the desired address are an offset into the cache line, generally 4, 5, or 6 bits for a 16/32/64 byte cache line.

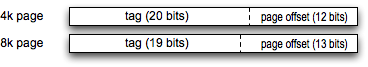

Separately, the CPU defines a page size for the virtual memory system. 4 and 8 Kilobytes are common. The least significant bits of the address are the offset within the page, 12 or 13 bits for 4 or 8 K respectively. The most significant bits are a page number, used by the CPU cache as a tag. The hardware fetches the tag of the selected cache lines to check against the upper bits of the desired address. If they match, it is a cache hit and no access to DRAM is needed.
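The bit slicing can be sketched in a few lines. The function name and the default sizes (64-byte lines, 4 KB pages) are choices made for this example; it follows the description above in treating the page number as the tag.

```python
def split_address(addr, line_bytes=64, page_bytes=4096):
    """Split an address into cache-line offset, page offset, and page number.

    Both sizes must be powers of two.
    """
    line_bits = line_bytes.bit_length() - 1   # 6 bits for a 64-byte line
    page_bits = page_bytes.bit_length() - 1   # 12 bits for a 4 KB page
    return {
        "line_offset_bits": line_bits,
        "page_offset_bits": page_bits,
        "line_offset": addr & (line_bytes - 1),
        "page_offset": addr & (page_bytes - 1),
        "page_number": addr >> page_bits,     # compared against the stored tag
    }


parts = split_address(0x12345678)
print(hex(parts["line_offset"]))   # 0x38
print(hex(parts["page_offset"]))   # 0x678
print(hex(parts["page_number"]))   # 0x12345
```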

Consider a network using traditional L3 routing: you give each subscriber an IP address on their own IP subnet. You need to have a router address on the same subnet, and you need a broadcast address. Needing 3 IPs per subscriber means a /30. That's 4 IP addresses allocated per customer.
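Python's standard `ipaddress` module shows the cost directly. The prefix below comes from the 192.0.2.0/24 documentation range and is only an example.

```python
import ipaddress

# One subscriber's subnet; the prefix is an arbitrary documentation address.
net = ipaddress.ip_network("192.0.2.0/30")

hosts = list(net.hosts())          # usable addresses: router and subscriber
print(net.num_addresses)           # 4
print(net.network_address)         # 192.0.2.0
print(hosts)                       # the two usable host addresses
print(net.broadcast_address)       # 192.0.2.3
```

Two of the four addresses are usable by hosts; the other two are the network and broadcast addresses.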

My current project relies on a large number of

In a typical datacenter, the servers sit behind a load balancer. The simplest such equipment distributes sessions without modifying the packets, but the market demands

High speed wide area networks are expensive. If a one-time purchase of a magic box at each end can reduce the monthly cost of the connection between them, then there is an economic benefit to buying the magic box.

Organizations set up HTTP proxies to enforce security policies, to cache content, and for a host of other reasons.
