In software development sometimes you spend time on an implementation which you are unreasonably proud of, but ultimately decide not to use in the product. This is one such story.

I needed to retrieve information from an attached disk, such as its model and serial number. There are commands which can do this, like hdparm, sdparm, and smartctl, but initially I tried to avoid depending on any such tool by interrogating the hard drive directly. In pure Python.

Snicker all you want; it did work. My first implementation used an older Linux API to retrieve this information, the HDIO_GET_IDENTITY ioctl. This ioctl maps more or less directly to an ATA IDENTIFY or SCSI INQUIRY sent to the drive. The implementation uses the Python struct module to describe the layout of the struct hd_driveid buffer that the ioctl fills in.

```python
import fcntl
import struct

# From /usr/include/linux/hdreg.h, struct hd_driveid:
#   10H = misc stuff, mostly deprecated
#   20s = serial_no
#   3H  = misc stuff
#   8s  = fw_rev
#   40s = model
#   ... plus a bunch more stuff we don't care about.
STRUCT_HD_DRIVEID = '@ 10H 20s 3H 8s 40s'
HDIO_GET_IDENTITY = 0x030d  # <linux/hdreg.h>


def GetDriveId(dev):
    """Return information from interrogating the drive.

    This routine issues a HDIO_GET_IDENTITY ioctl to a block device,
    which only root can do.

    Args:
      dev: name of the device, such as 'sda' or '/dev/sda'

    Returns:
      (serial_number, fw_version, model) as strings
    """
    if not dev.startswith('/'):
        dev = '/dev/' + dev
    with open(dev, 'rb') as fd:
        # struct hd_driveid is 512 bytes. Passing an immutable bytes object
        # makes fcntl.ioctl() return a copy of the buffer the kernel filled in.
        buf = fcntl.ioctl(fd, HDIO_GET_IDENTITY, bytes(512))
    fields = struct.unpack_from(STRUCT_HD_DRIVEID, buf)
    # Strip the space (or NUL) padding and decode the fields to text.
    serial_no = fields[10].strip(b' \0').decode('ascii', 'replace')
    fw_rev = fields[14].strip(b' \0').decode('ascii', 'replace')
    model = fields[15].strip(b' \0').decode('ascii', 'replace')
    return (serial_no, fw_rev, model)
```

No, no, wait, stop snickering, it does work! It has to run as root, which is one reason why I eventually abandoned this approach.

```
$ sudo python hdio.py
('5RY0N6BD', '3.ADA', 'ST3250310AS')
```
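Because the ioctl needs root, the parsing half of `GetDriveId` is easier to exercise on its own. The following is a minimal sketch, not part of the original code. The hypothetical helpers below unpack the same `'@ 10H 20s 3H 8s 40s'` prefix from a synthetic, space-padded 512-byte buffer, and use `struct.calcsize` to show where the three strings live. Their byte offsets (20, 46 and 54) correspond to IDENTIFY words 10, 23 and 27, which mirrors the layout of the raw ATA IDENTIFY data.

```python
import struct

# Same layout prefix as struct hd_driveid in <linux/hdreg.h>.
STRUCT_HD_DRIVEID = '@ 10H 20s 3H 8s 40s'


def string_field_offsets():
    """Byte offsets of serial_no, fw_rev and model within hd_driveid."""
    serial = struct.calcsize('@ 10H')
    fw_rev = struct.calcsize('@ 10H 20s 3H')
    model = struct.calcsize('@ 10H 20s 3H 8s')
    return serial, fw_rev, model


def parse_hd_driveid(buf):
    """Hypothetical helper: the parsing step of GetDriveId, minus the ioctl."""
    fields = struct.unpack_from(STRUCT_HD_DRIVEID, buf)
    return tuple(fields[i].strip(b' \0').decode('ascii', 'replace')
                 for i in (10, 14, 15))


def make_fake_driveid(serial, fw_rev, model):
    """Hypothetical helper: a synthetic 512-byte hd_driveid for testing."""
    buf = bytearray(512)
    s_off, f_off, m_off = string_field_offsets()
    buf[s_off:s_off + 20] = serial.ljust(20).encode('ascii')
    buf[f_off:f_off + 8] = fw_rev.ljust(8).encode('ascii')
    buf[m_off:m_off + 40] = model.ljust(40).encode('ascii')
    return bytes(buf)
```

Feeding `make_fake_driveid('5RY0N6BD', '3.ADA', 'ST3250310AS')` through `parse_hd_driveid` returns the same tuple the real drive produced above, without touching any hardware.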

HDIO_GET_IDENTITY is deprecated in Linux 2.6, and using it logs a message saying that SG_IO should be used instead. SG_IO is an ioctl for sending SCSI commands to a device. To reach an ATA drive, the IDENTIFY command is wrapped inside a SCSI ATA PASS-THROUGH command. SG_IO also doesn't require my entire Python process to run as root; I'd "only" have to give it CAP_SYS_RAWIO. So of course I changed the implementation... still in Python. Stop snickering.
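Since the point of switching was to run with CAP_SYS_RAWIO instead of as root, it helps to be able to confirm that a process actually holds that capability. Here is a minimal sketch, assuming a Linux /proc filesystem. It parses the `CapEff:` line of `/proc/self/status`, which is a hexadecimal bitmask, and tests bit 17, the value of `CAP_SYS_RAWIO` in `<linux/capability.h>`. The function names are hypothetical.

```python
CAP_SYS_RAWIO = 17  # bit number from <linux/capability.h>


def capeff_from_status(status_text):
    """Return the effective capability mask from /proc/<pid>/status text."""
    for line in status_text.splitlines():
        if line.startswith('CapEff:'):
            return int(line.split()[1], 16)
    raise ValueError('no CapEff line found')


def has_cap_sys_rawio(status_text):
    """True if CAP_SYS_RAWIO is set in the effective capability mask."""
    return bool((capeff_from_status(status_text) >> CAP_SYS_RAWIO) & 1)
```

Usage would be `has_cap_sys_rawio(open('/proc/self/status').read())`. Passing the text in, rather than reading the file inside the function, keeps the check testable on canned input.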

```python
import ctypes
import fcntl


class AtaCmd(ctypes.Structure):
    """ATA Command Pass-Through (12-byte CDB)

    http://www.t10.org/ftp/t10/document.04/04-262r8.pdf"""
    _fields_ = [
        ('opcode', ctypes.c_ubyte),
        ('protocol', ctypes.c_ubyte),
        ('flags', ctypes.c_ubyte),
        ('features', ctypes.c_ubyte),
        ('sector_count', ctypes.c_ubyte),
        ('lba_low', ctypes.c_ubyte),
        ('lba_mid', ctypes.c_ubyte),
        ('lba_high', ctypes.c_ubyte),
        ('device', ctypes.c_ubyte),
        ('command', ctypes.c_ubyte),
        ('reserved', ctypes.c_ubyte),
        ('control', ctypes.c_ubyte)]


class SgioHdr(ctypes.Structure):
    """<scsi/sg.h> sg_io_hdr_t."""
    _fields_ = [
        ('interface_id', ctypes.c_int),
        ('dxfer_direction', ctypes.c_int),
        ('cmd_len', ctypes.c_ubyte),
        ('mx_sb_len', ctypes.c_ubyte),
        ('iovec_count', ctypes.c_ushort),
        ('dxfer_len', ctypes.c_uint),
        ('dxferp', ctypes.c_void_p),
        ('cmdp', ctypes.c_void_p),
        ('sbp', ctypes.c_void_p),
        ('timeout', ctypes.c_uint),
        ('flags', ctypes.c_uint),
        ('pack_id', ctypes.c_int),
        ('usr_ptr', ctypes.c_void_p),
        ('status', ctypes.c_ubyte),
        ('masked_status', ctypes.c_ubyte),
        ('msg_status', ctypes.c_ubyte),
        ('sb_len_wr', ctypes.c_ubyte),
        ('host_status', ctypes.c_ushort),
        ('driver_status', ctypes.c_ushort),
        ('resid', ctypes.c_int),
        ('duration', ctypes.c_uint),
        ('info', ctypes.c_uint)]


def SwapString(data):
    """Swap the bytes within each 16 bit word of a string, then decode it.

    String data from an ATA IDENTIFY appears byteswapped, even on
    little-endian architectures: ATA puts the first character of each pair
    in bits 15:8 of a 16 bit word, and IDENTIFY data is delivered as
    little-endian words, so that character lands in the second byte.
    """
    data = bytes(data[:len(data) - len(data) % 2])  # whole words only
    swapped = bytearray(len(data))
    swapped[0::2] = data[1::2]
    swapped[1::2] = data[0::2]
    return swapped.decode('ascii', 'replace').strip(' \0')


SG_IO = 0x2285              # <scsi/sg.h>
SG_DXFER_FROM_DEV = -3      # <scsi/sg.h>
SG_INTERFACE_ID = ord('S')  # 'S' for SCSI generic


def GetDriveIdSgIo(dev):
    """Return information from interrogating the drive.

    This routine issues a SG_IO ioctl to a block device, which
    requires either root privileges or the CAP_SYS_RAWIO capability.

    Args:
      dev: name of the device, such as 'sda' or '/dev/sda'

    Returns:
      (serial_number, fw_version, model) as strings, or None on failure
    """
    if not dev.startswith('/'):
        dev = '/dev/' + dev

    ata_cmd = AtaCmd(opcode=0xa1,      # ATA PASS-THROUGH (12)
                     protocol=4 << 1,  # PIO Data-In
                     # flags field
                     # OFF_LINE = 0 (0 seconds offline)
                     # CK_COND = 1 (copy sense data in response)
                     # T_DIR = 1 (transfer from the ATA device)
                     # BYT_BLOK = 1 (length is in blocks, not bytes)
                     # T_LENGTH = 2 (transfer length in the SECTOR_COUNT field)
                     flags=0x2e,
                     features=0,
                     sector_count=1,   # one 512-byte block of IDENTIFY data
                     lba_low=0, lba_mid=0, lba_high=0,
                     device=0,
                     command=0xec,     # IDENTIFY DEVICE
                     reserved=0, control=0)

    sense = ctypes.create_string_buffer(64)
    identify = ctypes.create_string_buffer(512)
    sgio = SgioHdr(interface_id=SG_INTERFACE_ID,
                   dxfer_direction=SG_DXFER_FROM_DEV,
                   cmd_len=ctypes.sizeof(ata_cmd),
                   mx_sb_len=ctypes.sizeof(sense), iovec_count=0,
                   dxfer_len=ctypes.sizeof(identify),
                   dxferp=ctypes.addressof(identify),
                   cmdp=ctypes.addressof(ata_cmd),
                   sbp=ctypes.addressof(sense),
                   timeout=3000)  # milliseconds; other fields default to 0

    with open(dev, 'rb') as fd:
        # Pass the header itself as a mutable buffer: fcntl.ioctl() hands the
        # kernel a pointer to it and copies the updated fields back. Passing
        # addressof() as an int can overflow the C int ioctl() parses.
        # OSError is raised if the ioctl itself fails.
        if fcntl.ioctl(fd, SG_IO, sgio) != 0:
            return None

    # With CK_COND set, expect descriptor-format sense data (0x72) holding an
    # ATA Status Return descriptor (code 0x09, additional length 0x0c).
    raw = sense.raw
    if raw[0] != 0x72 or raw[8] != 0x09 or raw[9] != 0x0c:
        return None

    # IDENTIFY format as defined on pg 91 of
    # http://t13.org/Documents/UploadedDocuments/docs2006/D1699r3f-ATA8-ACS.pdf
    # Words 10-19: serial number, 23-26: firmware revision, 27-46: model.
    data = identify.raw
    serial_no = SwapString(data[20:40])
    fw_rev = SwapString(data[46:54])
    model = SwapString(data[54:94])
    return (serial_no, fw_rev, model)
```

For the unbelievers out there, this one works too.

```
$ sudo python sgio.py
('5RY0N6BD', '3.ADA', 'ST3250310AS')
```
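Two parts of the SG_IO version can be checked without a drive or root privileges. The following minimal sketch is separate from the code above. It builds a 12-byte ATA PASS-THROUGH (12) CDB with `struct` and composes the `0x2e` flags byte from its named fields. The bit positions used are OFF_LINE in bits 7:6, CK_COND in bit 5, T_DIR in bit 3, BYT_BLOK in bit 2 and T_LENGTH in bits 1:0, taken from the SAT definition of the command. It puts 1 in the COUNT field, because T_LENGTH=2 says the transfer length lives there and IDENTIFY returns a single 512-byte block.

The sketch also goes one step beyond the sense check in `GetDriveIdSgIo`, which only confirms that an ATA Status Return descriptor came back. It pulls out the ATA ERROR and STATUS registers from descriptor bytes 3 and 13 and tests the ERR bit. It assumes the ATA descriptor is the first one, at byte 8. All helper names are hypothetical.

```python
import struct

ATA_PASS_THROUGH_12 = 0xa1
PROTOCOL_PIO_DATA_IN = 4
ATA_IDENTIFY_DEVICE = 0xec
ATA_STATUS_ERR = 0x01  # ERR bit of the ATA STATUS register


def pass_through_flags(off_line=0, ck_cond=0, t_dir=0, byt_blok=0, t_length=0):
    """Pack byte 2 of an ATA PASS-THROUGH CDB from its named fields."""
    return ((off_line & 0x3) << 6 | (ck_cond & 1) << 5 | (t_dir & 1) << 3
            | (byt_blok & 1) << 2 | (t_length & 0x3))


def identify_cdb(sector_count=1):
    """12-byte ATA PASS-THROUGH (12) CDB carrying ATA IDENTIFY DEVICE."""
    flags = pass_through_flags(ck_cond=1, t_dir=1, byt_blok=1, t_length=2)
    return struct.pack('12B',
                       ATA_PASS_THROUGH_12,
                       PROTOCOL_PIO_DATA_IN << 1,  # PROTOCOL is bits 4:1
                       flags,
                       0,                    # features
                       sector_count,         # blocks to transfer
                       0, 0, 0,              # lba_low, lba_mid, lba_high
                       0,                    # device
                       ATA_IDENTIFY_DEVICE,  # command
                       0, 0)                 # reserved, control


def ata_status_from_sense(sense):
    """Return (error, status) from an ATA Status Return sense descriptor.

    Assumes descriptor-format sense data (response code 0x72) whose first
    descriptor, starting at byte 8, is the ATA Status Return descriptor
    (code 0x09, additional length 0x0c). Returns None otherwise.
    """
    if len(sense) < 22 or sense[0] != 0x72:
        return None
    desc = sense[8:22]
    if desc[0] != 0x09 or desc[1] != 0x0c:
        return None
    return desc[3], desc[13]


def ata_command_failed(sense):
    """True if the sense data is missing or reports the ATA ERR bit."""
    regs = ata_status_from_sense(sense)
    return regs is None or bool(regs[1] & ATA_STATUS_ERR)
```

With the real buffers, `ata_command_failed(sense.raw)` could be checked before trusting the IDENTIFY data.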

So, there you go: HDIO_GET_IDENTITY and SG_IO implemented in pure Python. They do work, but in the process of writing them and reading existing code it became clear that low-level ATA handling is fraught with peril. The disk industry has been iterating on this interface for decades, and there is a ton of gear out there that made questionable choices in how to interpret the spec. Most of the code in existing utilities exists not to implement the base operations but to handle the quirks of various manufacturers. I decided that I didn't want to go down that path, and will instead rely on forking sdparm and smartctl as needed.
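Forking smartctl might look roughly like the following. This is a sketch with several assumptions. It runs `smartctl -i` and picks out the `Device Model:`, `Serial Number:` and `Firmware Version:` lines that smartctl prints for ATA drives. SCSI devices use different labels, so the label set should be checked against the smartctl version in use. `parse_smartctl_info` and `GetDriveIdSmartctl` are hypothetical names.

```python
import subprocess

# Labels `smartctl -i` prints for ATA devices (assumed; SCSI output differs).
_LABELS = {'Serial Number': 'serial', 'Firmware Version': 'fw_rev',
           'Device Model': 'model'}


def parse_smartctl_info(text):
    """Return (serial_number, fw_version, model) from `smartctl -i` output."""
    found = {}
    for line in text.splitlines():
        label, sep, value = line.partition(':')
        if sep and label.strip() in _LABELS:
            found[_LABELS[label.strip()]] = value.strip()
    return (found.get('serial'), found.get('fw_rev'), found.get('model'))


def GetDriveIdSmartctl(dev):
    """Hypothetical wrapper: fork smartctl and parse its identity section."""
    if not dev.startswith('/'):
        dev = '/dev/' + dev
    # smartctl's exit status is a bitmask of conditions, so a nonzero code
    # does not necessarily mean the identity section is missing.
    out = subprocess.run(['smartctl', '-i', dev], capture_output=True,
                         text=True, check=False).stdout
    return parse_smartctl_info(out)
```

Splitting the parser from the subprocess call keeps the fragile part, the text format, testable against saved output.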

I'll just leave this post here for search engines to find. I'm sure there is a ton of demand for this information.

Stop snickering.

Binary encoding of protocol headers is now passé. Therefore our new IP option is ASCII encoded, which also allows us to avoid the discussion about how Little Endian CPUs have won and all headers should be LE instead of BE. The content of the option is the destination IP address, followed by the '@' sign, followed by the decimal Autonomous System Number where the recipient can be reached. For example, a destination of 4.4.4.4 within the Level 3 network space would be "4.4.4.4@3356" using Level 3's primary ASN of 3356.
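Here is a minimal sketch of encoding and parsing the option payload described above. Only the `address@ASN` payload format comes from the description. The function names are hypothetical, and the IP option's type and length bytes are left out because they are not specified here. Python's standard `ipaddress` module validates the address, and the ASN is range-checked as a 32-bit value.

```python
import ipaddress


def encode_as_option(address, asn):
    """Build the ASCII payload, e.g. b'4.4.4.4@3356'."""
    ip = ipaddress.IPv4Address(address)
    if not 0 <= asn <= 0xFFFFFFFF:
        raise ValueError('ASN out of range')
    return f'{ip}@{asn}'.encode('ascii')


def decode_as_option(payload):
    """Split an ASCII payload back into (IPv4Address, ASN)."""
    text = payload.decode('ascii')
    addr, sep, asn = text.partition('@')
    if not sep or not asn.isdigit():
        raise ValueError(f'malformed option: {text!r}')
    return ipaddress.IPv4Address(addr), int(asn)
```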

We know the story by now: by selling chips into products from multiple networking companies, commodity chips sell in large volume and benefit from larger discounts. This is a compelling factor in the low end of the switching market, where margins are thin and a primary selling point is price.

Say it costs $10 million to design a chipset for a high-end switch, and the resulting set of silicon costs $500 in the expected volumes. If that high-end switch sells 10,000 units in its first year, then the NRE (non-recurring engineering) cost for developing it amounts to $1,000 per unit, double the cost of buying the chip itself. The longer the model remains in production, the more its cost can be amortized... but the company has to pay the complete cost to develop the silicon before the first unit is sold.
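The amortization is straightforward division. Here is a minimal sketch using the figures above. The function names are hypothetical, and the three-year volume in the usage note is an illustrative assumption, not a number from the text.

```python
def nre_per_unit(nre_dollars, units_shipped):
    """One-time engineering cost spread across every unit shipped so far."""
    if units_shipped <= 0:
        raise ValueError('NRE is paid before the first unit ships')
    return nre_dollars / units_shipped


def cost_per_unit(nre_dollars, silicon_dollars, units_shipped):
    """Silicon cost plus the amortized share of the design cost."""
    return silicon_dollars + nre_per_unit(nre_dollars, units_shipped)


first_year = nre_per_unit(10_000_000, 10_000)  # 1000.0, twice the $500 chip
```

With a hypothetical 30,000 units over three years, `nre_per_unit(10_000_000, 30_000)` falls to about $333 per unit. The full $10 million is still spent before any of those units ship.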

The merchant silicon vendors of the world can dedicate more ASIC engineers to their projects. This isn't as big a win as it sounds: tripling the size of the design team does not result in a chip with three times the features or in a third of the time. As with software projects (see The Mythical Man-Month), the increasing coordination overhead of a larger team results in steeply diminishing returns.
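One way to see the diminishing returns is Brooks's observation in The Mythical Man-Month that the number of pairwise communication paths grows quadratically with team size. The team sizes of 10 and 30 below are hypothetical and are chosen only to illustrate tripling:

```latex
C(n) = \frac{n(n-1)}{2}, \qquad
C(10) = \frac{10 \cdot 9}{2} = 45, \qquad
C(30) = \frac{30 \cdot 29}{2} = 435 \approx 9.7\, C(10)
```

Tripling the team multiplies the coordination paths by almost ten, while it at most triples the number of people doing the design work.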


Setting up the

Much of the publisher interface concerns formatting and presentation of articles. RSS feeds generally require significant work on the formatting to look reasonable, a service performed by Feedburner and by tools like Flipboard and Google Currents. Nonetheless, I don't think the formatting is the main point; presentation is a means to an end. RSS is a reasonable transport protocol, but people have pressed it into service as the supplier of presentation and layout as well by wrapping a UI around it. It isn't very good at that. Publishing tools have to expend effort on presentation and layout to make it usable.

Let's consider a typical processing path for sending a packet in a Unix system in the early 1990s:

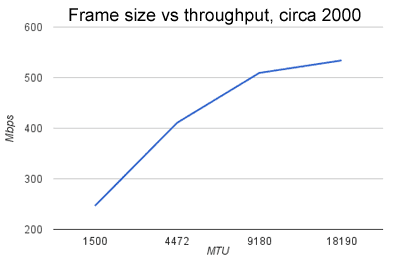

I have data on packet size versus throughput in this timeframe, collected in the last months of 2000. It was gathered for a presentation at

Large Segment Offload (LSO), referred to as TCP Segmentation Offload (TSO) in Linux circles, is a technique to copy a large chunk of data from the application process and hand it as-is to the NIC. The protocol stack generates a single set of Ethernet+TCP+IP headers to use as a template, and the NIC handles the details of incrementing the sequence number and calculating fresh checksums for a new header prepended to each packet. Chunks of 32K and 64K are common, so with a standard 1460-byte MSS the NIC transmits about 23 or 45 TCP segments without further intervention from the protocol stack.
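What the NIC does with an LSO/TSO chunk can be sketched in a few lines. This is a simplified model, not any driver's or NIC's actual implementation. It splits a payload into MSS-sized pieces, advances the TCP sequence number by the bytes already sent, and computes a fresh RFC 1071 Internet checksum for each segment. To keep it short, the checksum covers only a hypothetical 4-byte stand-in header holding the sequence number, plus the payload. A real NIC also updates the IP total length and ID fields and folds in the TCP pseudo-header.

```python
import struct


def internet_checksum(data):
    """RFC 1071 ones' complement sum of 16-bit words."""
    if len(data) % 2:
        data += b'\0'
    total = sum(struct.unpack(f'!{len(data) // 2}H', data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def segment(payload, start_seq, mss=1460):
    """Split one large send into (seq, checksum, chunk) tuples, like TSO."""
    segments = []
    for offset in range(0, len(payload), mss):
        chunk = payload[offset:offset + mss]
        seq = (start_seq + offset) & 0xFFFFFFFF  # sequence numbers wrap
        header = struct.pack('!I', seq)  # stand-in for the template header
        segments.append((seq, internet_checksum(header + chunk), chunk))
    return segments
```

The protocol stack's work is a single call to `segment`. The per-packet loop is what moves into NIC hardware.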

QFabric consists of Nodes at the edges wired to large Interconnect switches in the core. The whole collection is monitored and managed by out-of-band Directors. Juniper emphasizes that QFabric should be thought of as a single distributed switch, not as a network of individual switches. The entire QFabric is managed as one entity.

The fundamental distinction between QFabric and conventional switches is in the forwarding decision. In a conventional switch topology each layer of switching looks at the L2/L3 headers to figure out what to do. The edge switch sends the packet to the distribution switch, which examines the headers again before sending the packet on towards the core (which examines the headers again). QFabric does not work this way.

I don't know the answer, but I suspect it has to do with Link Aggregation (LAG). Server Node Groups allow a LAG to be configured using ports spanning the two Nodes. In a chassis switch, LAG is handled by the ingress chip. It looks up the destination address to find the destination port. Every chip knows the membership of all LAGs in the chassis. The ingress chip computes a hash of the packet to pick which LAG member port to send the packet to. This is how LAG member ports can be on different line cards: the ingress chip sends the packet to the correct card.
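Ingress-side member selection can be sketched as a hash over the flow's addresses and ports, reduced modulo the number of live member ports. This is a minimal illustration under stated assumptions. Real switch ASICs use their own hash functions and field selections; `zlib.crc32` is just a convenient stand-in, and the port names are hypothetical. Because the member list is an input, the sketch also shows why a member failure must reach every ingress chip: each holds its own copy of the list and keeps picking the dead port until that copy changes.

```python
import zlib


def pick_member(members, src_ip, dst_ip, proto, src_port, dst_port):
    """Choose a LAG member port by hashing the flow 5-tuple."""
    if not members:
        raise ValueError('LAG has no live members')
    key = f'{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}'.encode()
    return members[zlib.crc32(key) % len(members)]


lag = ['card1/port3', 'card4/port7']           # hypothetical member ports
flow = ('10.0.0.1', '10.0.0.2', 6, 49152, 80)  # one TCP flow
port = pick_member(lag, *flow)                 # same flow -> same port
```

Hashing per flow rather than per packet keeps every packet of a TCP connection on one member link, so they are not reordered.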

The downside of implementing LAG at ingress is that every chip has to know the membership of all LAGs in the system. Whenever a LAG member port goes down, all chips have to be updated to stop using it. With QFabric, where ingress chips are distributed across a network and the largest fabric could have thousands of server LAG connections, updating all of the Nodes whenever a link goes down could take a really long time. LAG failover is supposed to be quick, with minimal packet loss when a link fails. Therefore I wonder if Juniper has implemented LAG a bit differently, perhaps by handling member port selection in the Interconnect, in order to minimize the time to handle a member port failure.

Until quite recently these systems had to be constructed using a standard routing protocol, because that is what the routers would support. BGP is a reasonable choice for this because its interoperability between modern implementations is excellent. The optimization system would peer with the routers, periodically recompute the desired behavior, and export those choices as the best route to destinations. To avoid having the Optimizer be a single point of failure able to bring down the entire network, the routers would retain peering connections with each other at a low weight as a fallback. The fallback routes would never be used so long as the Optimizer routes are present.
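The fallback arrangement comes down to preference ordering. Routes injected by the Optimizer win whenever they exist, and the low-preference routes learned directly between routers are used only when the Optimizer's routes are absent. Here is a minimal sketch. It assumes a single numeric preference where higher wins, in the spirit of BGP LOCAL_PREF or a vendor weight, and it ignores the rest of the BGP best-path tie-breakers. The `Route` type and the values are hypothetical, and the prefixes come from the documentation ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    source: str      # 'optimizer' or 'peer'
    preference: int  # higher wins, like LOCAL_PREF or a vendor weight


def best_route(candidates, prefix):
    """Highest-preference route for a prefix, or None if nothing is known."""
    matching = [r for r in candidates if r.prefix == prefix]
    return max(matching, key=lambda r: r.preference, default=None)


routes = [
    Route('203.0.113.0/24', '192.0.2.1', 'peer', 10),        # fallback
    Route('203.0.113.0/24', '192.0.2.9', 'optimizer', 200),  # Optimizer's pick
]
```

If the Optimizer's session drops, its routes are withdrawn, and `best_route` over the remaining candidates falls back to the peer route without any other change.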

Product support for software defined networking is
