Gigabit Ethernet carries data at 1 Gbps, yet if we watch the bits fly by on the wire they actually go past at 1.25 Gbps. Why is that?

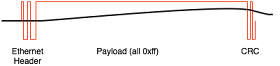

To see why, let's examine a hypothetical Ethernet which does send bits at exactly its link speed. A 0 bit in the packet is transmitted as a negative voltage on the wire, while a 1 bit is positive. Consider a packet where the payload is all 0xff.

The first problem is that with so many 1 bits, the voltage on the wire is held high for a very long period of time. This results in baseline wander, where the neutral voltage drifts up to find a new stable point.
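
One way to put a number on this is to track the running sum of the line levels. The sketch below is only a model of the hypothetical uncoded Ethernet described above: it assumes +1 for a 1 bit and -1 for a 0 bit, and the name `running_dc_sum` is made up for this illustration. A sum that keeps growing means the wire is spending far more time at one voltage than the other.

```python
def running_dc_sum(payload: bytes) -> list[int]:
    """Cumulative sum of line levels: +1 for each 1 bit, -1 for each 0 bit."""
    total = 0
    sums = []
    for byte in payload:
        for i in range(7, -1, -1):  # walk the bits of each byte, MSB first
            bit = (byte >> i) & 1
            total += 1 if bit else -1
            sums.append(total)
    return sums


all_ones = running_dc_sum(b"\xff" * 64)     # the all-0xff payload from the text
alternating = running_dc_sum(b"\xaa" * 64)  # 10101010..., for comparison

print(all_ones[-1])                      # 512: the sum never comes back down
print(max(abs(s) for s in alternating))  # 1: the sum stays pinned near zero
```

The all-0xff payload drives the sum up by one for every bit sent, while the alternating pattern never strays more than one step from zero.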

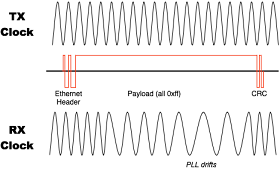

Even if we could control baseline wander, we have a more serious problem. There is no clock signal anywhere on the wire. The receiver recovers the clock using a phase-locked loop (PLL), which means it watches for bit transitions and uses them to infer the clock edges. If there are no transitions, the receive clock will start to drift. The illustration is exaggerated for clarity.
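
To get a feel for the scale of the problem, here is a rough sketch that finds the longest stretch of identical bits in a payload and estimates how far a free-running receive clock would slip over that stretch. The function names are invented for this example, and the 200 ppm frequency offset is an assumed figure chosen for illustration, not a measurement. A real PLL behaves in a more complicated way than a fixed offset.

```python
def longest_run(payload: bytes) -> int:
    """Length of the longest stretch of identical consecutive bits."""
    longest = current = 0
    previous = None
    for byte in payload:
        for i in range(7, -1, -1):  # MSB first
            bit = (byte >> i) & 1
            # Extend the current run, or start a new one on a transition.
            current = current + 1 if bit == previous else 1
            longest = max(longest, current)
            previous = bit
    return longest


def drift_in_bit_times(run_length: int, offset_ppm: float) -> float:
    """Clock slip, in bit periods, after run_length bits with no transition.

    Assumes a constant frequency offset between sender and receiver.
    """
    return run_length * offset_ppm * 1e-6


run = longest_run(b"\xff" * 1500)      # a 1500-byte all-0xff payload
print(run)                             # 12000 bits without a single transition
print(drift_in_bit_times(run, 200.0))  # roughly 2.4 bit periods of slip
```

Under these assumptions the receiver would be off by more than two whole bits by the end of the payload, which is why it needs regular transitions to resynchronize.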

Enter 8b10b

Gigabit Ethernet uses 8b10b encoding to solve these issues. Each byte is expanded into a 10-bit symbol. Only a subset of the 1024 possible symbols is used, chosen so that:

- over time, the numbers of ones and zeros are equal

- there are sufficient bit transitions to keep the PLL locked
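
The two criteria above can be explored by brute force over all 1024 candidate symbols. The sketch below does not reproduce the actual 8b10b code tables. It only filters on two assumed constraints: each symbol has four, five, or six ones, and no symbol contains a run of more than five identical bits. The helper names are hypothetical.

```python
from itertools import groupby


def ones(symbol: int) -> int:
    """Number of 1 bits in the symbol."""
    return bin(symbol).count("1")


def longest_run_in_symbol(symbol: int, width: int = 10) -> int:
    """Longest stretch of identical bits within one symbol."""
    bits = format(symbol, f"0{width}b")
    return max(len(list(group)) for _, group in groupby(bits))


symbols = range(1 << 10)
balanced = [s for s in symbols if ones(s) == 5]            # five ones, five zeros
near_balanced = [s for s in symbols if ones(s) in (4, 6)]  # off by one bit either way

# Assumed filter: keep only symbols with enough transitions inside them.
candidates = [s for s in balanced + near_balanced
              if longest_run_in_symbol(s) <= 5]

print(len(balanced))       # 252
print(len(near_balanced))  # 420
print(len(candidates))     # how many survive the run-length filter
```

There are only 252 perfectly balanced symbols, fewer than the 256 values a byte can take. That is why the first criterion says "over time": some bytes have to map to slightly unbalanced symbols, and the encoder evens out the surplus across successive symbols.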

Wikipedia has an excellent, detailed description of how 8b10b works.

In Ethernet, 8b10b is used by the 1000BASE-X family of Gigabit links. Slower speeds, and Gigabit over twisted pair (1000BASE-T), use other line codes. For 10 Gigabit, the 25% overhead of 8b10b was too expensive, as it would have resulted in a 12.5 GHz clock. At those frequencies an extra gigahertz or two really hurts. Instead 10G Ethernet relies on a 64b66b code, which cuts the overhead to about 3% and, despite the similar name, operates on a completely different principle. That will be a topic for another day.
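
The overhead arithmetic is easy to check. This small sketch computes the rate on the wire from the data rate and the code's expansion ratio. The function name `line_rate` is made up for this example.

```python
from fractions import Fraction


def line_rate(data_rate_gbps: int, payload_bits: int, coded_bits: int) -> Fraction:
    """Bit rate on the wire when every payload_bits become coded_bits."""
    return Fraction(data_rate_gbps) * coded_bits / payload_bits


print(float(line_rate(1, 8, 10)))    # 1.25    Gigabit Ethernet with 8b10b
print(float(line_rate(10, 8, 10)))   # 12.5    10G if it had kept 8b10b
print(float(line_rate(10, 64, 66)))  # 10.3125 10G with 64b66b
```

Going from 10 bits per 8 to 66 bits per 64 brings the extra bandwidth down from 2.5 Gbps to 0.3125 Gbps at the same 10 Gbps data rate.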