Last week Andy Firth published The Demise of the Low Level Programmer, expressing dismay over the lack of low level systems knowledge displayed by younger engineers in the console game programming field. Andy's particular concerns deal with proper use of floating versus fixed point numbers, CPU cache behavior and branch prediction, bit manipulation, etc.

I have to admit a certain sympathy for this position. I've focussed on low level issues for much of my career. As I'm not in the games space, the specific topics I would offer differ somewhat: cache coherency with I/O, and page coloring, for example. Nonetheless, I feel a certain solidarity.

Yet I don't recall those topics being taught in school. I had classes which covered operating systems and virtual memory, but distinctly remember being shocked at the complications the first time I encountered a system which mandated page coloring. Similarly, though I had a class on assembly programming, by the time I actually needed to work at that level I had to learn new instruction sets and many new techniques.

In my experience at least, schools never did teach such topics. This stuff is learned by doing, as part of a project or on the job. The difference now is that fewer programmers are learning it. It's not because programmers are getting worse. I interview a lot of young engineers, and their caliber is as high as I have ever experienced. It is simply that computing has grown a great deal in 20 years, there are a lot more topics available to learn, and frankly the cutting edge stuff has moved on. Even in the gaming space which spurred Andy's original article, big chunks of the market have been completely transformed. Twenty years ago casual gaming meant Game Boy, an environment so constrained that heroic optimization efforts were required. Now casual gaming means web based games on social networks. The relevant skill set has changed.

I'm sure Andy Firth is aware of the changes in the industry. It's simply that we have a tendency to assume that markets where there is a lot of money being made will inevitably attract new engineers, and so there should be a steady supply of new low level programmers for consoles. Unfortunately I don't believe that is true. Markets which are making lots of money don't attract young engineers. Markets which are perceived to be growing do, and other parts of the gaming market are perceived to be growing faster.

Page Coloring

Because I brought it up earlier, we'll conclude with a discussion of page coloring. I am not satisfied with the Wikipedia page, which seems to have paraphrased a FreeBSD writeup describing page coloring as a performance issue. In some CPUs, albeit not current mainstream CPUs, coloring isn't just a performance issue. It is essential for correct operation.

Cache Index

Before fetching a value from memory the CPU consults its cache. The least significant bits of the desired address are an offset into the cache line, generally 4, 5, or 6 bits for a 16/32/64 byte cache line.

The next few bits of the address are an index to select the cache line. If the cache has 1024 entries, then ten bits would be used as the index. Things get a bit more complicated here due to set associativity, which lets entries occupy several different locations to improve utilization. A two way set associative cache of 1024 entries would take 9 bits from the address and then check two possible locations. A four way set associative cache would use 8 bits. Etc.

Page tag

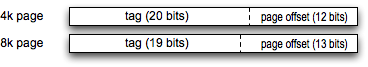

Separately, the CPU defines a page size for the virtual memory system. 4 and 8 Kilobytes are common. The least significant bits of the address are the offset within the page, 12 or 13 bits for 4 or 8 K respectively. The most significant bits are a page number, used by the CPU cache as a tag. The hardware fetches the tag of the selected cache lines to check against the upper bits of the desired address. If they match, it is a cache hit and no access to DRAM is needed.

To reiterate: the tag is not the remaining bits of the address above the index and offset. The bits to be used for the tag are determined by the page size, and not directly tied to the details of the CPU cache indexing.

Virtual versus Physical

In the initial few stages of processing the load instruction the CPU has only the virtual address of the desired memory location. It will look up the virtual address in its TLB to get the physical address, but using the virtual address to access the cache is a performance win: the cache lookup can start earlier in the CPU pipeline. It's especially advantageous to use the virtual address for the cache index, as that processing happens earlier.

The tag is almost always taken from the physical address. Virtual tagging complicates shared memory across processes: the same physical page would have to be mapped at the same virtual address in all processes. That is an essentially impossible requirement to put on a VM system. Tag comparison happens later in the CPU pipeline, when the physical address will likely be available anyway, so it is (almost) universally taken from the physical address.

This is where page coloring comes into the picture.

Virtually Indexed, Physically Tagged

From everything described above, the size of the page tag is independent of the size of the cache index and offset. They are separate decisions, and frankly the page size is generally mandated. It is kept the same for all CPUs in a given architectural family even as they vary their cache implementations.

Consider, then, the impact of a series of design choices:

- 32 bit CPU architecture

- 64 byte cache line: 6 bits of cache line offset

- 8K page size: 19 bits of page tag, 13 bits of page offset

- 512 entries in the L1 cache, direct mapped. 9 bits of cache index.

- virtual indexing, for a shorter CPU pipeline. Physical tagging.

- write back

What does this mean? It means the lower 15 bits of the virtual address (6 bits of offset plus 9 bits of index) and the upper 19 bits of the physical address are referenced while looking up items in the cache. That adds up to 34 bits of a 32 bit address, so two of the bits, bits 13 and 14, overlap between the virtual and physical addresses. Those two bits are the page color. For proper operation, this CPU requires that all processes which map in a particular page do so at the same color. Though in theory the page could be any color so long as all mappings are the same, in practice the virtual color bits are set the same as the underlying physical page.
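As a rough sketch of that bit counting, the following Python computes how many color bits a given geometry produces and extracts the color of an address. The constants come from the design choices listed above; the helper names (`color_bit_count`, `page_color`, `mapping_is_safe`) and the sample addresses are hypothetical, chosen only for illustration.

```python
LINE_SIZE = 64    # bytes per cache line: 6 offset bits
ENTRIES = 512     # direct mapped L1: 9 index bits
PAGE_SIZE = 8192  # 8K pages: 13 page offset bits


def bits(n):
    """log2 of a power-of-two size."""
    return n.bit_length() - 1


def color_bit_count(line_size=LINE_SIZE, entries=ENTRIES,
                    page_size=PAGE_SIZE, ways=1):
    """Number of index bits that lie above the page offset."""
    lookup_bits = bits(line_size) + bits(entries // ways)
    return max(0, lookup_bits - bits(page_size))


def page_color(addr):
    """Color of an address: the low bits of its page number."""
    mask = (1 << color_bit_count()) - 1
    return (addr >> bits(PAGE_SIZE)) & mask


def mapping_is_safe(vaddr, paddr):
    """Convention from the text: virtual color equals physical color."""
    return page_color(vaddr) == page_color(paddr)


print(color_bit_count())                        # 2
print(mapping_is_safe(0x10002040, 0x00082040))  # True, both are color 1
print(mapping_is_safe(0x20004040, 0x00082040))  # False, color 2 versus color 1
```

A VM system on such a CPU would need a check along these lines whenever it chooses a virtual address for a shared page.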

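The impact of not enforcing page coloring is dire. A write in one process will be stored in one cache line, while a read from another process will access a different cache line. Because the cache is write back, the new data may not have reached DRAM yet, so the reader sees stale data.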
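To make the failure concrete, here is a toy Python model of a direct mapped, write back, virtually indexed and physically tagged cache using the geometry above. It is a simplification, not a description of any real CPU: each line holds a single value rather than 64 bytes, the caller supplies the physical address in place of a TLB, and the class name `ToyVIPTCache` and all addresses are invented for the example.

```python
LINE_BITS = 6    # 64 byte cache line
INDEX_BITS = 9   # 512 entries, direct mapped
PAGE_BITS = 13   # 8K pages


class ToyVIPTCache:
    """Toy model: virtual index, physical tag, write back."""

    def __init__(self, memory):
        self.memory = memory  # physical address -> value, stands in for DRAM
        self.lines = {}       # index -> [tag, paddr, value, dirty]

    def _index(self, vaddr):
        return (vaddr >> LINE_BITS) & ((1 << INDEX_BITS) - 1)

    def _lookup(self, vaddr, paddr):
        idx, tag = self._index(vaddr), paddr >> PAGE_BITS
        line = self.lines.get(idx)
        if line is None or line[0] != tag:
            if line is not None and line[3]:
                self.memory[line[1]] = line[2]  # write back the dirty victim
            line = [tag, paddr, self.memory.get(paddr, 0), False]
            self.lines[idx] = line
        return line

    def read(self, vaddr, paddr):
        return self._lookup(vaddr, paddr)[2]

    def write(self, vaddr, paddr, value):
        line = self._lookup(vaddr, paddr)
        line[2], line[3] = value, True  # DRAM is not updated yet


def demo():
    paddr = 0x00082040  # one shared physical location
    cache = ToyVIPTCache({paddr: "old"})
    cache.write(0x10002040, paddr, "new")          # process A, color 1
    wrong_color = cache.read(0x20004040, paddr)    # process B, color 2
    matching_color = cache.read(0x30002040, paddr) # process C, color 1
    return wrong_color, matching_color


print(demo())  # ('old', 'new')
```

The mapping with a mismatched color indexes a different cache line, misses, and fetches the old value from DRAM, while the mapping with the matching color hits the dirty line and sees the new value.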

Page coloring like this places quite a burden on the VM system, and one which would be difficult to retrofit into an existing VM implementation. OS developers would push back against new CPUs which proposed to require coloring, and you used to see CPU designs making fairly odd tradeoffs in their L1 cache because of it. HP PA-RISC used a very small (but extremely fast) L1 cache. I think they did this to use direct mapped virtual indexing without needing page coloring. There were CPUs with really insane levels of set associativity in the L1 cache, 8 way or even 16 way. This reduced the number of index bits to the point where a virtual index wouldn't require coloring.
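The associativity tradeoff follows from the same bit counting: coloring is unnecessary when the index and offset bits fit entirely within the page offset, which is the case when the cache size divided by the number of ways is no larger than the page size. A minimal sketch, with a hypothetical helper name and example sizes that are not taken from any particular CPU:

```python
def min_ways_without_coloring(cache_bytes, page_bytes):
    """Smallest associativity for which index + offset fit in the page offset."""
    return max(1, -(-cache_bytes // page_bytes))  # ceiling division


KB = 1024
print(min_ways_without_coloring(32 * KB, 8 * KB))  # 4: the 512 x 64 byte example cache
print(min_ways_without_coloring(32 * KB, 4 * KB))  # 8
print(min_ways_without_coloring(64 * KB, 4 * KB))  # 16
print(min_ways_without_coloring(4 * KB, 4 * KB))   # 1: a small direct mapped cache
```

The same formula shows why a sufficiently small direct mapped cache avoids the problem altogether.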